Overview

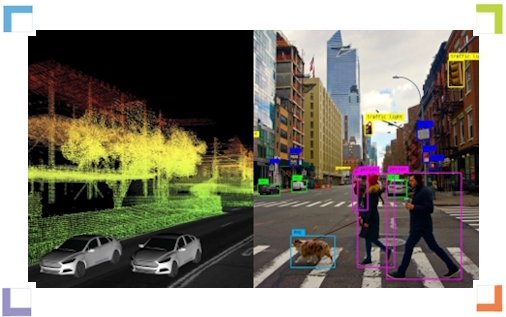

A recurring topic in autonomous driving is whether LiDAR or cameras are the better primary sensor. The debate reflects two different technical approaches. To understand why the discussion persists, it is useful to review the principles behind each approach and their respective strengths and limitations.

Perception's Role in Autonomous Driving

Autonomous driving transfers driving capability and responsibility from the human to the vehicle. An autonomous driving system generally consists of three core layers: perception, decision, and control. The perception layer functions like human eyes and ears, using on-board sensors such as cameras, LiDAR, millimeter-wave radar, and ultrasonic sensors to observe the environment, collect surrounding data, and forward it to the decision layer. The decision layer acts like a brain, processing incoming data via the software stack, chips, and compute platform to produce driving commands. The control layer acts as the vehicle's limbs, executing commands that affect propulsion, steering, lighting, and other actuators.
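The three-layer split can be sketched as a simple function chain. This is a minimal illustration, not a real driving stack: the `Obstacle` and `Command` types, the function names, and the 30 m safe gap are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    distance_m: float  # range to the object ahead, in meters
    source: str        # sensor that reported it, e.g. "camera" or "lidar"


@dataclass
class Command:
    throttle: float  # 0.0 (none) to 1.0 (full)
    brake: float     # 0.0 (none) to 1.0 (full)


def perceive(raw_ranges_m: dict[str, float]) -> list[Obstacle]:
    """Perception: turn per-sensor range readings into obstacle records."""
    return [Obstacle(distance_m=d, source=s) for s, d in raw_ranges_m.items()]


def decide(obstacles: list[Obstacle], safe_gap_m: float = 30.0) -> Command:
    """Decision: brake fully if the nearest obstacle is inside the safe gap."""
    nearest = min((o.distance_m for o in obstacles), default=float("inf"))
    if nearest < safe_gap_m:
        return Command(throttle=0.0, brake=1.0)
    return Command(throttle=0.3, brake=0.0)


def control(cmd: Command) -> str:
    """Control: stand-in for the actuator interface; returns a log line."""
    return f"throttle={cmd.throttle:.1f} brake={cmd.brake:.1f}"


def drive_step(raw_ranges_m: dict[str, float]) -> str:
    """One pass through perception, decision, and control."""
    return control(decide(perceive(raw_ranges_m)))
```

In this sketch the decision layer acts on the nearest reported range regardless of which sensor produced it, so a single sensor that sees an obstacle is enough to trigger braking.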

Perception is a prerequisite for intelligent driving and directly affects driving safety through detection accuracy, coverage, and latency. Data from the perception layer also shapes decisions and downstream control actions, making its role critical.

Two Camps: Pure Vision vs LiDAR-Centric

The field broadly divides into two camps: the pure-vision camp and the LiDAR camp.

The vision camp argues that humans drive using visual information processed by the brain, so cameras combined with deep neural networks and sufficient compute can achieve similar capabilities. Tesla has promoted a pure-vision approach with its FSD Beta and has shifted away from millimeter-wave radar. Baidu has also published an L4-level pure-vision solution called Apollo Lite.

The LiDAR camp, represented by companies such as Waymo in the Robotaxi domain, uses mechanical LiDAR, millimeter-wave radar, ultrasonic sensors, and multiple cameras to achieve L4-level commercial deployments.

How Each Sensor Type Works

Cameras are passive sensors that capture high-frame-rate, high-resolution images at relatively low cost. Their imaging quality depends heavily on ambient lighting, and they perform less reliably in adverse lighting or weather conditions.

LiDAR is an active sensor that emits laser pulses and measures the reflected signals, typically by timing each pulse's round trip, to obtain depth information. LiDAR provides high ranging precision, long range, and strong resistance to interference from ambient light, because it supplies its own illumination. However, LiDAR point clouds are sparse and unordered compared with images and cannot directly capture color or texture. For these reasons, LiDAR is typically used together with other sensors to provide complementary information.
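For pulsed (time-of-flight) LiDAR, depth follows from the round-trip time of each pulse, and the beam direction turns that range into a 3D point. The sketch below assumes an ideal time-of-flight sensor; the function names and the axis convention (x forward, y left, z up) are illustrative assumptions, not taken from any specific device.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by SI definition


def tof_range_m(round_trip_s: float) -> float:
    """Range from round-trip time; the pulse covers the distance twice."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_xyz(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one return (range plus beam angles) to a Cartesian point.

    Assumed convention: x forward, y left, z up; azimuth is measured in
    the horizontal plane, elevation upward from that plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )
```

A round trip of one microsecond corresponds to a range of roughly 150 m. Each return yields one such point, which is why the resulting point cloud is an unordered set rather than a dense image grid.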

Industry Adoption and Trends

Many automakers and suppliers have adopted LiDAR in production vehicles or upcoming models. Examples include Volvo and Luminar announcing production plans, and several Chinese manufacturers selecting LiDAR as standard equipment for certain models. 3D LiDAR plays an important role in vehicle localization, path planning, decision making, and perception. Between 2022 and 2025, a majority of OEMs are expected to start deploying LiDAR in production.

From a technical perspective, image sensors provide data that closely resembles human vision but require heavier software processing. Cameras are inexpensive and comparatively easy to qualify to automotive-grade standards, but their performance degrades in darkness or adverse weather. Because a camera image records no direct depth measurement, pure-vision solutions rely heavily on advanced algorithms to convert 2D camera images into accurate 3D models.
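The 2D-to-3D step can be illustrated with the standard pinhole camera model. A pixel fixes only a viewing ray; a depth value, which a vision system has to estimate (for example from stereo or a learned depth network), is needed to place the point in space. The intrinsics used below (`fx`, `fy`, `cx`, `cy`) are made-up example values, and lens distortion is ignored.

```python
def backproject(u: float, v: float, depth_m: float,
                fx: float, fy: float, cx: float, cy: float):
    """Map pixel (u, v) with an estimated depth to a camera-frame 3D point.

    Pinhole model, no lens distortion: x right, y down, z along the
    optical axis. fx, fy are focal lengths in pixels; (cx, cy) is the
    principal point.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 1920x1080 camera.
K = dict(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)

# The same pixel lands at different 3D positions depending on the depth
# estimate, so any depth error becomes a position error.
near = backproject(1060.0, 540.0, 20.0, **K)
far = backproject(1060.0, 540.0, 40.0, **K)
```

Here a pixel 100 px right of the image center maps to a lateral offset of 2 m at 20 m depth and 4 m at 40 m depth, which shows how directly the quality of the depth estimate controls the accuracy of the reconstructed 3D scene.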

LiDAR offers precise distance measurements and robust performance across different lighting conditions. Current LiDAR types include mechanical, hybrid solid-state, and pure solid-state. Mechanical LiDAR is mature and provides 360-degree scanning but is bulky and costly. Hybrid solid-state designs can be more cost-effective for large-scale production but have a limited field of view. Pure solid-state designs, including optical phased array (OPA) and flash LiDAR, are a likely future trend but still need further technical development before mass production. Among current approaches, hybrid solid-state LiDAR using MEMS scanning appears to be the mainstream path toward factory-installed automotive production.

Commercial and Safety Considerations

At present, LiDAR-based perception generally offers stronger sensing performance than pure vision, which has led many OEMs and Tier-1 suppliers to adopt LiDAR to accelerate production and reduce reliance on complex vision algorithms, mapping, and localization. Critics of LiDAR often point to cost; proponents of pure vision emphasize scalability and data-driven algorithmic improvements.

Recent developments have reduced LiDAR cost significantly as the technology shifts toward solid-state designs and as the LiDAR supply chain matures. Some reports suggest per-unit LiDAR costs can fall dramatically compared with historical prices, driven in part by manufacturers across the supply chain including Hesai, Livox (DJI), Huawei, RoboSense, and Leishen.

Because LiDAR enables faster deployment with higher sensing redundancy, many OEMs choose LiDAR-based solutions for safety and regulatory reasons, especially when L4-level or commercial robotaxi operations are the target. Pure-vision approaches require large amounts of user data and significant in-house software development to achieve the same level of safety across all scenarios; this raises barriers for traditional automakers that lack large-scale data and software teams.

Strategy Examples from Industry Players

Tesla emphasizes a vision-first strategy, minimizing hardware complexity and relying on software and large-scale data for perception. Tesla uses techniques such as "shadow mode," in which the vehicle compares the driver's actions with the model's predictions and, when the two disagree, collects that data as labeled training examples.
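The idea behind shadow mode can be sketched as a disagreement filter. This is only an illustration of the concept described above, not Tesla's implementation: the `Frame` fields, the use of steering angle as the compared signal, and the 5-degree threshold are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    frame_id: int
    driver_steering_deg: float  # what the human driver actually did
    model_steering_deg: float   # what the model would have done


def select_disagreements(frames, threshold_deg: float = 5.0):
    """Keep frames where the model and the driver differ by more than the threshold.

    The driver's action serves as the label for each selected frame.
    """
    return [
        f for f in frames
        if abs(f.driver_steering_deg - f.model_steering_deg) > threshold_deg
    ]


log = [
    Frame(1, driver_steering_deg=0.0, model_steering_deg=1.0),
    Frame(2, driver_steering_deg=-12.0, model_steering_deg=2.0),
    Frame(3, driver_steering_deg=4.0, model_steering_deg=3.5),
]
to_upload = select_disagreements(log)
```

Filtering this way concentrates the collected data on the situations the model currently handles differently from a human, rather than on routine driving where the two already agree.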

Baidu and Mobileye illustrate mixed strategies. Baidu has developed ANP (Apollo Navigation Pilot), a visual solution for production vehicles, while its Robotaxi efforts combine LiDAR and vision to enhance reliability. Mobileye has a camera-centric architecture for many OEMs but also partners with LiDAR vendors for Robotaxi deployments to raise overall perception robustness.

Cost Dynamics and Market Impact

LiDAR cost used to be prohibitively high, with single units costing tens of thousands of dollars. As LiDAR technology moves toward solid-state architectures and as manufacturing scales, unit costs have fallen substantially. This change has led some automakers to reconsider earlier statements against LiDAR integration. Elon Musk has argued that cameras provide far more information per second than radar or LiDAR and that improving vision is a better allocation of effort. Nevertheless, many companies reportedly still use LiDAR during development as a source of high-quality depth labels for training and validating vision models.
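Using LiDAR as a depth reference for a vision model can be sketched as an error metric computed only where a LiDAR return exists. The example below uses mean absolute relative error, a common depth-estimation metric; the function name and the convention of marking pixels without a return as `None` are assumptions for illustration.

```python
def abs_rel_error(predicted_m, lidar_m):
    """Mean |prediction - reference| / reference over pixels with a LiDAR return.

    LiDAR is sparse, so pixels without a return (None) are skipped.
    """
    pairs = [
        (p, t) for p, t in zip(predicted_m, lidar_m)
        if t is not None and t > 0
    ]
    if not pairs:
        raise ValueError("no valid LiDAR depths to compare against")
    return sum(abs(p - t) / t for p, t in pairs) / len(pairs)


# Vision-model depth estimates for three pixels, with LiDAR references
# available for only the first two.
predicted = [10.0, 22.0, 5.0]
reference = [10.0, 20.0, None]
error = abs_rel_error(predicted, reference)
```

In this toy case the first pixel matches exactly and the second is off by 10 percent, so the mean relative error over the two valid pixels is 0.05.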

Sensor Redundancy and Autonomy Levels

For vehicles offering L2 functionality and above, industry practice favors multiple sensor types and redundancy to ensure safety. Typical configurations for L2 systems may include 9 to 19 sensors, such as ultrasonic sensors, long- and short-range radars, and surround-view cameras. Moving toward L3 may require 19 to 27 sensors and could include LiDAR and high-precision navigation and localization systems. In current market offerings, many new vehicles are equipped with numerous cameras, millimeter-wave radars, and ultrasonic sensors.

Conclusion

Safety and reliability are the fundamental requirements for vehicle automation. Both pure-vision and LiDAR-centric strategies have advantages and trade-offs, and with current technology the prevailing industry approach is pragmatic: use multiple complementary sensors for redundancy, continue improving vision algorithms, and reduce LiDAR costs through technology and supply-chain advances. As perception algorithms and sensor hardware evolve, different technical routes will likely coexist or converge depending on use case and deployment requirements.