One persistent topic in autonomous driving is whether LiDAR or cameras provide superior perception. To understand why this debate exists, we need to examine the principles behind the two approaches and their respective strengths and weaknesses.

Perception in the autonomous driving stack

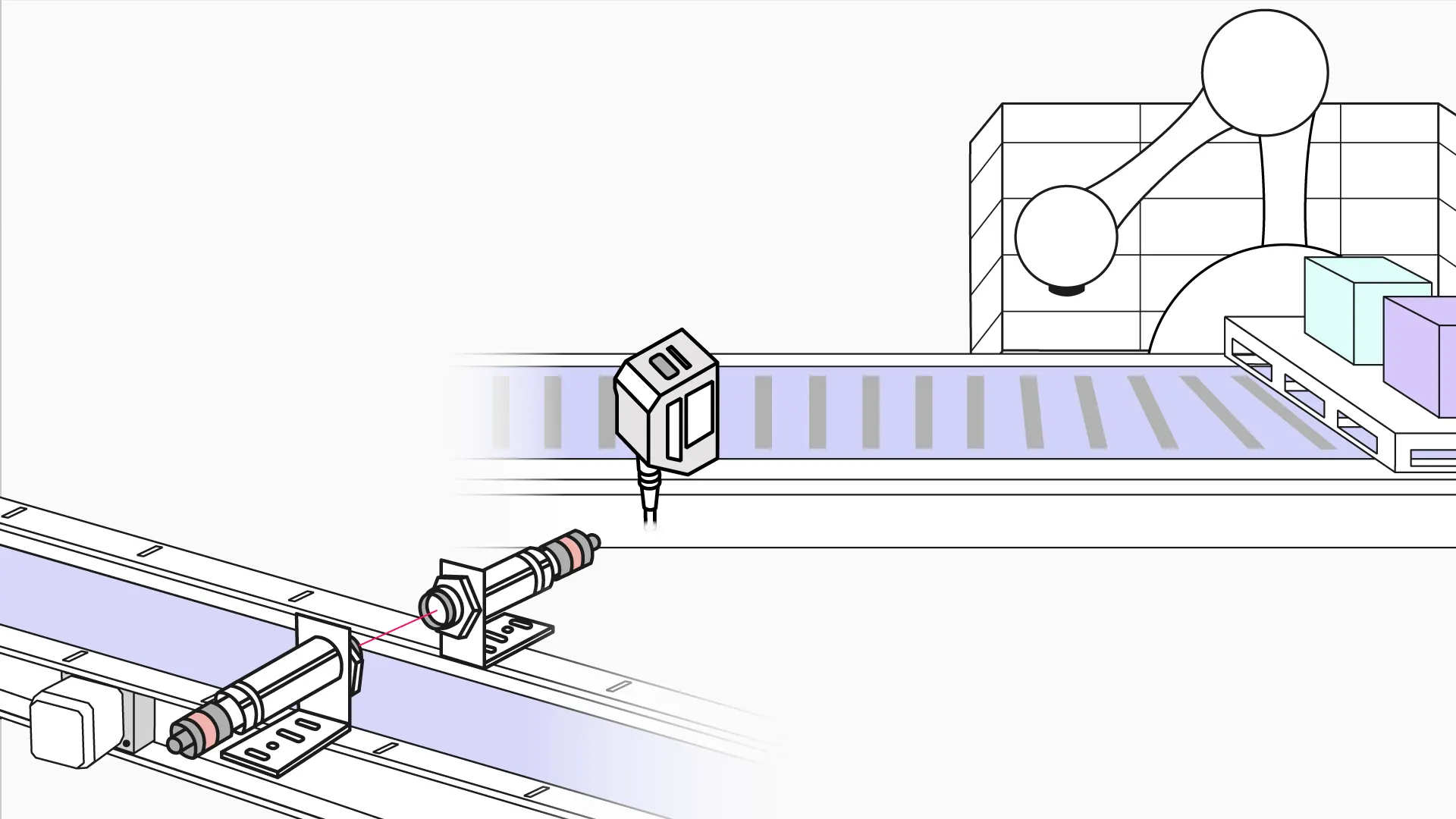

Autonomous driving progressively transfers driving capability and responsibility from the human driver to the vehicle. The driving stack is typically divided into three core stages: perception, decision, and actuation. The perception stage acts as the vehicle's eyes and ears, using onboard sensors such as cameras, LiDAR, and millimeter-wave radar to detect the surrounding environment and supply data to the decision layer. The decision stage functions as the brain: it processes the incoming data on the vehicle's operating system, chips, and compute platform and outputs control instructions. The actuation stage acts as the limbs, executing commands for power delivery, steering, lights, and other vehicle systems. Perception is a prerequisite for intelligent driving. Its accuracy, coverage, and speed directly affect driving safety and strongly influence downstream decision-making and control.
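As a rough illustration of how the three stages hand data to one another, the sketch below wires them together as plain functions. Everything in it is hypothetical and chosen only for readability: the names `Obstacle`, `Command`, `perceive`, `decide`, and `actuate`, the 30 m safe gap, and the "brake if anything is close" rule. A real stack runs each stage as separate software on dedicated hardware.

```python
from dataclasses import dataclass

@dataclass
class Obstacle:
    distance_m: float  # distance ahead along the ego lane
    source: str        # sensor that reported it, e.g. "camera" or "lidar"

@dataclass
class Command:
    throttle: float      # 0.0 to 1.0
    brake: float         # 0.0 to 1.0
    steering_deg: float  # arbitrary sign convention for this sketch

def perceive(raw: list[tuple[str, float]]) -> list[Obstacle]:
    """Perception ("eyes and ears"): turn raw sensor readings into obstacles."""
    return [Obstacle(distance_m=d, source=s) for s, d in raw]

def decide(obstacles: list[Obstacle], safe_gap_m: float = 30.0) -> Command:
    """Decision ("brain"): brake if anything is inside the safe gap, else cruise."""
    nearest = min((o.distance_m for o in obstacles), default=float("inf"))
    if nearest < safe_gap_m:
        return Command(throttle=0.0, brake=1.0, steering_deg=0.0)
    return Command(throttle=0.3, brake=0.0, steering_deg=0.0)

def actuate(cmd: Command) -> str:
    """Actuation ("limbs"): a real stack would drive hardware; here we only format."""
    return f"throttle={cmd.throttle:.1f} brake={cmd.brake:.1f} steer={cmd.steering_deg:.1f}"

print(actuate(decide(perceive([("camera", 45.0), ("lidar", 18.5)]))))
# prints: throttle=0.0 brake=1.0 steer=0.0
```

The nesting makes the dependency explicit. The decision stage can only be as good as the obstacle list it receives, so a missed detection in perception propagates straight into the control output.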

Two camps: pure-vision and LiDAR-centric systems

The industry is broadly split into two camps: pure-vision systems and LiDAR-based systems.

The vision camp argues that humans drive using visual input processed by the brain, so an array of cameras combined with deep neural networks and sufficient compute should be able to achieve comparable performance. Some companies have pursued this pure-vision approach and removed radar sensors from certain vehicle configurations.

The LiDAR camp is represented by robotaxi companies that use mechanical LiDAR, millimeter-wave radar, ultrasonic sensors, and multiple cameras to achieve Level 4 commercial deployments.

From a product perspective, current pure-vision systems are typically deployed as Level 2 driver assistance in consumer vehicles. Companies using vision-based approaches emphasize camera arrays with 360-degree coverage and claim a perception range wider than human vision. They also acknowledge that real-world road scenarios still require further technical breakthroughs before autonomous driving becomes mainstream. Other vendors introduced higher-level vision solutions originally intended for broader scenarios. In some cases they have scaled these back to driver-assistance levels, relying on multi-camera systems and deep learning networks for surround perception without high-line-count mechanical LiDAR.

How LiDAR works and why it matters

LiDAR is a sensor that precisely acquires 3D position information by emitting laser pulses and measuring the distance to targets. By analyzing the returned signal's strength, spectral amplitude, frequency, and phase, LiDAR builds accurate 3D representations of objects. Several strengths have made it a key component of Level 4 and higher autonomous systems: precise measurement of contours and angles, stable performance under changing illumination, and reliable detection of general obstacles.
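The paragraph above does not say how the distance itself is obtained. In pulsed time-of-flight LiDAR, one common design, the sensor times each pulse's round trip and halves the light path: d = c·Δt / 2. Frequency-modulated (FMCW) designs measure distance differently, and this sketch does not cover them. The function names are illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def range_from_round_trip_m(round_trip_s: float) -> float:
    """Pulsed time-of-flight: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0

def round_trip_for_range_s(range_m: float) -> float:
    """Inverse: how long the receiver waits for an echo from `range_m`."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_PER_S

# A 1 microsecond echo corresponds to roughly 150 m,
# and a 1 ns timing error shifts the measured range by roughly 15 cm.
print(f"{range_from_round_trip_m(1e-6):.1f} m")         # 149.9 m
print(f"{range_from_round_trip_m(1e-9) * 100:.1f} cm")  # 15.0 cm
```

The second line shows why timing electronics matter so much for LiDAR accuracy. Centimeter-level range precision requires resolving the echo's arrival time to well under a nanosecond.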

3D LiDAR plays an important role in vehicle localization, path planning, decision-making, and perception. Between 2022 and 2025, many OEMs are expected to adopt LiDAR in production models. Internationally, some automakers have announced production plans with LiDAR suppliers. In the Chinese market, some OEMs have selected LiDAR suppliers and made LiDAR standard equipment on certain models. As a result, LiDAR remains a common route for companies pursuing higher levels of autonomy.

Technical comparison: vision vs LiDAR

Cameras use image sensors that capture complex environments at high frame rates and high resolution at relatively low cost. However, cameras are passive sensors that rely on ambient light, so image quality and perception degrade in low-light or adverse lighting conditions.

LiDAR is an active sensor that emits pulsed laser light and measures the scattered returns to obtain depth information. It offers high accuracy, long range, and strong resistance to interference. However, LiDAR point clouds are sparse and unstructured, which makes them harder to use directly. LiDAR also uses a single-wavelength laser, so it cannot capture color or texture. For these reasons LiDAR is usually paired with other sensors that provide complementary information. From a production perspective, current LiDAR-based perception is generally somewhat stronger than pure vision. Many OEMs and Tier 1 suppliers have relied on LiDAR to accelerate commercial deployment while sidestepping some of the challenges in vision algorithms, compute, and mapping. Critics, however, have cited LiDAR cost as a barrier to broad adoption.
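One common way to combine LiDAR's geometry with a camera's color is to project each LiDAR point into the image with a pinhole camera model. The point then takes the pixel value it lands on. The sketch below is a minimal version under stated assumptions. It uses a hypothetical calibration (LiDAR frame x forward, y left, z up; camera frame x right, y down, z forward; co-located sensors; no lens distortion) and hypothetical intrinsics. It is not any vendor's fusion pipeline.

```python
# Hypothetical extrinsics: rotation mapping LiDAR axes to camera axes, zero offset.
R_LIDAR_TO_CAM = ((0.0, -1.0, 0.0),
                  (0.0, 0.0, -1.0),
                  (1.0, 0.0, 0.0))
T_LIDAR_TO_CAM = (0.0, 0.0, 0.0)

def lidar_to_camera(p, R=R_LIDAR_TO_CAM, t=T_LIDAR_TO_CAM):
    """Rigid transform p_cam = R @ p_lidar + t, written without NumPy."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))

def project_to_pixel(p_cam, fx, fy, cx, cy, width, height):
    """Pinhole projection; returns (u, v) or None if the point is not visible."""
    x, y, z = p_cam
    if z <= 0.0:
        return None  # point is behind the camera
    u = fx * x / z + cx
    v = fy * y / z + cy
    if 0.0 <= u < width and 0.0 <= v < height:
        return int(u), int(v)
    return None  # projects outside the image

def colorize(points, image, intrinsics):
    """Attach the pixel value under each visible LiDAR point.

    `image` is indexed image[v][u]; `intrinsics` is (fx, fy, cx, cy, width, height).
    """
    colored = []
    for p in points:
        pix = project_to_pixel(lidar_to_camera(p), *intrinsics)
        if pix is not None:
            u, v = pix
            colored.append((p, image[v][u]))
    return colored

intr = (500.0, 500.0, 320.0, 240.0, 640, 480)  # hypothetical 640x480 camera
print(project_to_pixel(lidar_to_camera((10.0, 1.0, 0.0)), *intr))  # (270, 240)
```

A point 10 m ahead and 1 m to the left lands 50 pixels left of the image center. Points behind the camera or outside its field of view are dropped, which is one reason LiDAR coverage and camera coverage have to be planned together.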

Recent developments include automotive-grade, medium-to-long-range LiDAR products with full-scene ranges around 150 meters and wide fields of view, providing uniform horizontal and vertical beam distribution to generate stable point clouds that are favorable for back-end perception algorithms. Some suppliers have announced aggressive cost reductions.
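To see why beam distribution matters at ranges around 150 m, consider how far apart adjacent beams are at a given distance. The gap between two beams separated by an angle Δθ at range r is 2·r·tan(Δθ/2), which is approximately r·Δθ. The sketch below assumes a hypothetical uniform 0.2° × 0.2° grid (not the specification of any product mentioned above) and a car rear modeled as a 1.8 m × 1.5 m flat target.

```python
import math

def beam_spacing_m(range_m: float, angular_res_deg: float) -> float:
    """Distance between neighboring beams at `range_m` (chord of one angular step)."""
    return 2.0 * range_m * math.tan(math.radians(angular_res_deg) / 2.0)

def returns_on_target(range_m: float, width_m: float, height_m: float,
                      h_res_deg: float, v_res_deg: float) -> int:
    """Rough count of returns on a flat target facing the sensor.

    Assumes a beam grazes the target's edge, so n full gaps fit n + 1 beams.
    """
    cols = int(width_m // beam_spacing_m(range_m, h_res_deg)) + 1
    rows = int(height_m // beam_spacing_m(range_m, v_res_deg)) + 1
    return cols * rows

# Hypothetical 0.2 deg uniform grid; car rear modeled as 1.8 m x 1.5 m.
for r in (50.0, 100.0, 150.0):
    print(r, round(beam_spacing_m(r, 0.2), 3), returns_on_target(r, 1.8, 1.5, 0.2, 0.2))
```

Under these assumptions the same car yields about 99 returns at 50 m and only about 12 at 150 m. With a uniform grid, that decline is predictable and identical in every direction, which is one reason an evenly distributed point cloud is easier for downstream perception algorithms to handle.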

LiDAR types and production considerations

LiDAR technologies are typically categorized as mechanical, hybrid (semi-solid-state), and solid-state, and each involves tradeoffs. Mechanical LiDAR is mature and provides fast scanning and 360-degree coverage, but it tends to be bulky and expensive, which complicates vehicle integration. Hybrid solid-state LiDAR can be cheaper and more amenable to mass production, but it may have a limited field of view. Pure solid-state LiDAR is widely considered the likely future direction, with optical phased array (OPA) and flash LiDAR as the two main technical routes, though further advances are needed before it reaches widespread production. At present, MEMS-based hybrid solid-state designs appear to be the mainstream path toward factory-installed, mass-produced automotive LiDAR.

Vision systems: software-centric approach

Vision-based systems produce data that closely resemble human visual experience and follow a "lighter hardware, heavier software" paradigm. Camera hardware is inexpensive, and it can pass automotive-grade qualification more easily and at lower cost. High-resolution, high-frame-rate imaging provides rich, visually intuitive scene information. However, cameras perform poorly in the dark, and their safety and precision can degrade at night or in adverse weather. The lower hardware bar increases dependence on advanced algorithms, so these systems need substantial software capability for image processing, perception, and command generation.

At the ADAS stage, where the driver retains decision authority, software demands are relatively modest, so many OEMs adopted vision-based solutions. As autonomous driving advances to Level 3 and above, responsibility shifts to the vehicle platform and algorithmic requirements rise significantly. Currently, only a few companies with strong software and data capabilities have fully committed to or integrated vision-first solutions.

Pure-vision solutions often operate as closed systems, and upgrading them to Level 3 or higher is challenging for traditional automakers without strong software stacks. Some companies use "shadow mode" data collection to iteratively improve perception. Others pursue parallel strategies, using vision on production vehicles while equipping robotaxi fleets with LiDAR to improve reliability and perception performance. In cost-insensitive robotaxi deployments, some vendors have embraced LiDAR and worked with LiDAR suppliers to reduce costs while improving sensing capability.

2D images carry no direct depth information, so cues such as the position of a vehicle's rear corner or the vehicle's length are hard to judge. Vision solutions therefore rely on algorithms to reconstruct accurate 3D models from 2D images. Some companies combine large-scale, diverse real-world data collection with full-stack development to build an advantage in vision fusion. Shadow mode can also keep algorithm training costs under control. The vision stack runs in the background without controlling the vehicle, its predicted behavior is compared with the driver's actual actions, and only the discrepant cases are sent to the server for retraining.
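The shadow-mode selection step can be sketched as a simple filter. The model's would-be action is compared with what the driver did, and only frames where the two disagree are queued for upload. The record layout (`ShadowFrame`), the compared signals (steering angle and braking), and the 5° tolerance are all hypothetical. Production systems use richer triggers that are not described in the source.

```python
from dataclasses import dataclass

@dataclass
class ShadowFrame:
    frame_id: int
    predicted_steer_deg: float  # what the shadow model would have done
    driver_steer_deg: float     # what the human driver actually did
    predicted_brake: bool
    driver_brake: bool

def is_discrepant(f: ShadowFrame, steer_tol_deg: float = 5.0) -> bool:
    """Flag frames where the model's prediction and the driver's action diverge."""
    steer_gap = abs(f.predicted_steer_deg - f.driver_steer_deg)
    return steer_gap > steer_tol_deg or f.predicted_brake != f.driver_brake

def select_for_upload(frames: list[ShadowFrame], steer_tol_deg: float = 5.0) -> list[int]:
    """Only discrepant frames leave the vehicle; agreeing frames are discarded locally."""
    return [f.frame_id for f in frames if is_discrepant(f, steer_tol_deg)]

frames = [
    ShadowFrame(1, 0.0, 1.0, False, False),   # agrees within tolerance
    ShadowFrame(2, 0.0, 12.0, False, False),  # driver steered much harder
    ShadowFrame(3, 2.0, 2.0, False, True),    # driver braked, model would not have
]
print(select_for_upload(frames))  # [2, 3]
```

The cost saving comes from the filter itself. Most driving is uneventful, so uploading and labeling only the disagreements concentrates training effort on the cases the model currently gets wrong.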

Multi-sensor redundancy remains common

Some companies known for vision-first approaches also integrate LiDAR into their robotaxi fleets, combining LiDAR, radar, and cameras to improve perception accuracy and vehicle reliability. At present, neither LiDAR nor camera solutions has clearly outperformed the other, and multi-sensor redundancy remains a conservative and common industry approach.
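One simple way to express redundancy across LiDAR, radar, and cameras is a k-out-of-n vote. An obstacle is confirmed only when enough healthy sensors agree, and the system reports a degraded state when too few sensors are working to vote at all. The sketch below uses a 2-out-of-3 rule. The function name, the three-state output, and the voting threshold are illustrative assumptions, not a description of any company's fusion logic.

```python
from typing import Optional

def vote_obstacle(reports: dict[str, Optional[bool]], required: int = 2) -> str:
    """k-out-of-n vote over per-sensor obstacle reports.

    True  = sensor sees an obstacle in the region of interest
    False = sensor reports the region clear
    None  = sensor faulty or without valid output this cycle
    """
    healthy = [r for r in reports.values() if r is not None]
    if len(healthy) < required:
        # Too few working sensors to reach a quorum: hand off to a fallback
        # behavior instead of guessing.
        return "degraded"
    return "obstacle" if sum(healthy) >= required else "clear"

print(vote_obstacle({"camera": True, "lidar": True, "radar": False}))  # obstacle
print(vote_obstacle({"camera": False, "lidar": True, "radar": None}))  # clear
```

The second call shows the design tension. With one sensor down, the two remaining sensors disagree and the vote returns "clear". A more conservative policy would treat any single positive report as an obstacle, trading more false alarms for fewer missed detections.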

LiDAR cost trends and implications

Historically, LiDAR costs were very high, with single-unit systems costing tens of thousands of dollars. Recently, however, prices have fallen rapidly as LiDAR evolves toward solid-state designs and the wider supply chain matures. Some solid-state LiDAR technologies aim for sub-$200 price points at scale. Rapid cost reduction has been aided by the development of a robust supply chain in China, where multiple midstream suppliers and manufacturers have emerged.

One important reason many OEMs adopt LiDAR is that it enables faster deployment and, combined with other sensors, provides safety redundancy. In the short term, deep-learning vision systems may not cover all road conditions, which leaves safety uncertainties that LiDAR can mitigate. Falling LiDAR costs undermine the pure-vision argument that cameras are the only economically viable path. However, the competitiveness of pure-vision strategies rests not only on hardware cost but also on large-scale user data, in-house software development, and strong algorithmic capabilities.

Some industry leaders have argued that when radar or LiDAR disagrees with vision, vision should be trusted, because cameras deliver orders of magnitude more bits per second of information. In this view, radar must meaningfully increase the signal-to-noise ratio to justify fusing it with vision. Others note that manufacturers may still use LiDAR for ground-truth labeling and benchmarking while pursuing vision-based perception in production.
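The signal-to-noise argument can be made concrete with inverse-variance weighting. This textbook rule combines two independent, unbiased estimates of the same quantity by weighting each by 1/variance. It is used here only as an illustration and is not presented as how any particular company fuses sensors. The range estimates and variances below are made-up numbers.

```python
def inverse_variance_fuse(x1: float, var1: float,
                          x2: float, var2: float) -> tuple[float, float]:
    """Fuse two independent, unbiased estimates of the same quantity.

    Each estimate is weighted by 1/variance. The fused variance is never larger
    than the smaller input variance, but it only shrinks substantially when the
    second sensor is comparably precise.
    """
    w1, w2 = 1.0 / var1, 1.0 / var2
    fused = (w1 * x1 + w2 * x2) / (w1 + w2)
    return fused, 1.0 / (w1 + w2)

# Hypothetical camera range estimate: 40 m with variance 4 m^2.
print(inverse_variance_fuse(40.0, 4.0, 42.0, 1.0))    # precise second sensor
print(inverse_variance_fuse(40.0, 4.0, 42.0, 100.0))  # very noisy second sensor
```

With a precise second sensor, the fused variance drops from 4.0 to 0.8. With a very noisy one, it only drops to about 3.85, so the extra sensor adds little. Note that this rule assumes unbiased errors. It does not resolve a genuine disagreement, such as a spurious return, which is exactly the situation the argument above is about.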

Conclusion

Ultimately, safety and reliability are the primary criteria for autonomous driving. Given current technology, sensor redundancy is a pragmatic way to improve safety and user confidence. Both pure-vision and LiDAR-based approaches have advantages and disadvantages, and no single sensor is likely to handle every scenario. The prevailing industry view is that vehicles at Level 2 and above will incorporate multiple sensors with significant redundancy to ensure safety, and that higher autonomy levels generally call for more sensors.

Industry estimates indicate that Level 2 systems may require 9–19 sensors, including ultrasonic sensors, long- and short-range radar, and surround-view cameras. Level 3 could require 19–27 sensors, possibly including LiDAR and high-precision navigation and positioning. New models currently on the market typically carry numerous cameras, millimeter-wave radars, and ultrasonic sensors, reflecting a multi-sensor strategy. Different approaches are likely to coexist. Vision algorithms will continue to advance toward parity with human vision, while LiDAR technology and production will mature and become cheaper, better meeting OEM integration requirements.