1. Introduction

Intelligent vehicles (IVs) use onboard sensor systems to actively perceive their environment and exchange information with people, other vehicles, and infrastructure through in-vehicle information terminals. They are a key component of intelligent transportation systems. The first stage in the development of autonomous intelligent vehicles is Advanced Driver Assistance Systems (ADAS). ADAS assess the vehicle's safety and hazard states, intervene in braking, propulsion, and steering, and alert the driver through sound, images, lights, or haptic feedback.

ADAS mark a shift from passive to active vehicle safety. An ADAS is typically organized into perception, decision, and actuation layers, with the environment perception system at its core. This article focuses on the perception layer and examines the technical characteristics and development trends of its most common sensors: cameras, millimeter-wave radar, and LiDAR.

2. Environment Perception Sensors for Intelligent Vehicles

2.1 Vision Sensors

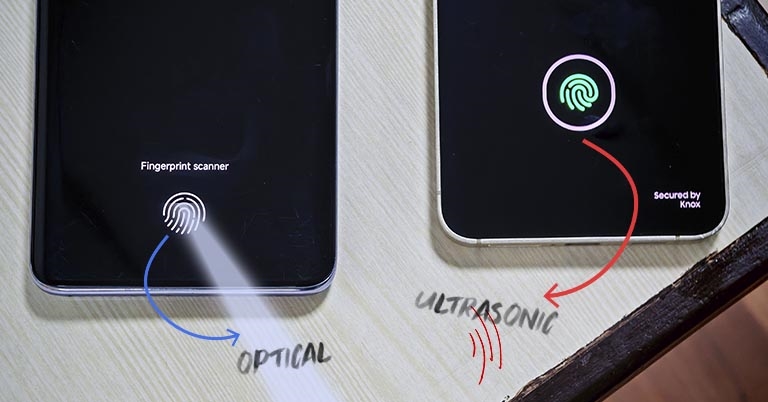

Vision sensors acquire images with cameras and use image processing to extract key object features such as area, centroid, size, and orientation. Their output is passed to decision units and underpins many ADAS warning and recognition functions. Cameras are also essential for automated driving.
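Image moments are one common way to compute the features listed above. The sketch below is illustrative only and makes several assumptions:

- The object has already been segmented into a binary mask, where 1 marks an object pixel.
- The function name `blob_features` and the dictionary keys it returns are hypothetical.
- "Size" is taken to mean the width and height of the axis-aligned bounding box.

```python
import math

def blob_features(mask):
    """Compute area, centroid, bounding-box size, and orientation of the
    foreground pixels (nonzero values) in a binary mask given as a list of rows."""
    pixels = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pixels:
        raise ValueError("mask contains no foreground pixels")
    area = len(pixels)                      # zeroth-order moment m00
    cx = sum(x for x, _ in pixels) / area   # m10 / m00
    cy = sum(y for _, y in pixels) / area   # m01 / m00
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    size = (max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)  # bounding-box width, height
    # Second-order central moments describe how pixels spread around the centroid.
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels)
    # Principal-axis angle from the +x (column) axis; image y grows downward.
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return {"area": area, "centroid": (cx, cy), "size": size, "orientation": theta}

mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(blob_features(mask))
# {'area': 6, 'centroid': (2.0, 1.5), 'size': (3, 2), 'orientation': 0.0}
```

In a real pipeline, a library routine would normally compute these moments on the output of a detector or segmentation network. The arithmetic would be the same.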

Based on principle and mounting configuration, in-vehicle vision sensors include monocular cameras, stereo/multi-camera systems, surround-view cameras, and infrared night-vision cameras.

Key features of vision sensors:

- Rich image information, capable of recognizing traffic signs, road markings, lane lines, and traffic lights.

- Cameras can assist localization by matching road features against high-precision maps, which gives them strong environmental adaptability.

- When combined with machine learning and deep learning, cameras can achieve higher detection performance.

- Performance is strongly affected by weather, and the algorithms are complex and computationally demanding.

2.2 Millimeter-Wave Radar

Onboard millimeter-wave radar typically comprises a transmit module, receive module, signal processing module, and antennas. The radar antenna emits millimeter-wave signals and receives reflections from targets. The signal processing module derives surrounding environment information such as relative distance, velocity, angle, and motion direction. This information is used for target tracking and recognition. Combined with vehicle state data and processed by the vehicle ECU, the system can alert the driver or perform braking, propulsion, and steering interventions.
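The sketch below shows, in simplified form, how radar outputs could feed a warning or braking decision. It is an illustration, not a production ADAS function. The following are hypothetical values chosen for the example:

- the `RadarDetection` fields,
- the lane half-width,
- the time-to-collision (TTC) thresholds.

Real systems track targets over several frames and use the full vehicle state rather than a single detection.

```python
import math
from dataclasses import dataclass

@dataclass
class RadarDetection:
    range_m: float          # relative distance to the target
    azimuth_rad: float      # angle from the sensor boresight, positive to the left
    range_rate_mps: float   # radial velocity; negative means the target is closing

def to_vehicle_frame(det):
    """Convert a polar detection into (x forward, y left) coordinates."""
    return (det.range_m * math.cos(det.azimuth_rad),
            det.range_m * math.sin(det.azimuth_rad))

def time_to_collision(det):
    """Range divided by closing speed; infinite if the target is not closing."""
    if det.range_rate_mps >= 0:
        return math.inf
    return det.range_m / -det.range_rate_mps

def decide(det, warn_ttc_s=2.5, brake_ttc_s=1.2, lane_half_width_m=1.8):
    """Hypothetical rule: warn or brake for closing targets ahead in the ego lane."""
    x, y = to_vehicle_frame(det)
    if x <= 0 or abs(y) > lane_half_width_m:
        return "ignore"
    ttc = time_to_collision(det)
    if ttc < brake_ttc_s:
        return "brake"
    if ttc < warn_ttc_s:
        return "warn"
    return "track"

print(decide(RadarDetection(40.0, 0.0, -20.0)))  # warn (TTC = 2.0 s)
```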

Millimeter-wave radar can be classified by operating principle into pulsed radar and frequency-modulated continuous-wave (FMCW) radar.

Pulsed radar estimates target range from the round-trip time between the transmitted and received pulses. However, it requires high-power pulses and isolation between the transmit and receive hardware, which makes the hardware complex and costly. It is therefore less common in automotive applications.

FMCW radar is the mainstream automotive solution. It derives range from the frequency difference (beat frequency) between the transmitted and reflected signals, and it derives relative velocity from the Doppler shift. It supports multi-target detection and is a mature technology.
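Both ranging principles reduce to a few standard relations, collected in the sketch below. It makes these assumptions:

- The FMCW chirp is a linear sawtooth with bandwidth B and duration T.
- Range-Doppler coupling is ignored.
- The 77 GHz carrier and the 1 GHz / 50 µs chirp in the usage lines are illustrative values, not taken from a specific product.

```python
C = 299_792_458.0  # speed of light in m/s

def pulsed_range(round_trip_s):
    """Pulsed radar: the pulse travels out and back, so R = c * t / 2."""
    return C * round_trip_s / 2

def fmcw_range(beat_hz, bandwidth_hz, chirp_s):
    """FMCW: the echo is delayed by 2R/c, so with chirp slope S = B / T the
    beat frequency is f_b = S * 2R / c, giving R = c * f_b / (2 * S)."""
    slope = bandwidth_hz / chirp_s
    return C * beat_hz / (2 * slope)

def fmcw_range_resolution(bandwidth_hz):
    """Two targets can be separated in range if they differ by at least c / (2B)."""
    return C / (2 * bandwidth_hz)

def doppler_velocity(doppler_hz, carrier_hz):
    """Radial velocity from the Doppler shift: v = lambda * f_d / 2
    (positive f_d means the target is approaching)."""
    wavelength = C / carrier_hz
    return wavelength * doppler_hz / 2

# Illustrative 77 GHz radar with a 1 GHz, 50 us chirp.
print(pulsed_range(1e-6))                # about 149.9 m
print(fmcw_range(13.34e6, 1e9, 50e-6))   # about 100 m
print(fmcw_range_resolution(1e9))        # about 0.15 m
print(doppler_velocity(5137.0, 77e9))    # about 10 m/s
```

The range-resolution relation shows why wider sweep bandwidth is attractive in automotive radar: doubling B halves the minimum separable range difference.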

By detection range, millimeter-wave radar is divided into short-range (SRR), mid-range (MRR), and long-range (LRR) types. Current MRR and LRR designs tend to offer several advantages over earlier LRR solutions: smaller size, higher single-chip integration, good performance, and lower development and material costs. They are expected to be the main direction of industry development.

Key characteristics of millimeter-wave radar:

- Wide field of view and long detection range, with maximum ranges up to about 250 m.

- Good detection performance and imaging capability, not affected by object color or ambient temperature; can operate in extreme conditions.

- High sensitivity, fast response, good directionality, and relatively high low-altitude tracking accuracy.

- Small size, light weight, mature technology, relatively simple process, and high cost effectiveness.

- Strong anti-interference capability, with little influence from ground clutter and noise.

- Coverage is fan-shaped with blind zones, and the radar cannot identify traffic signs or traffic lights.

2.3 LiDAR

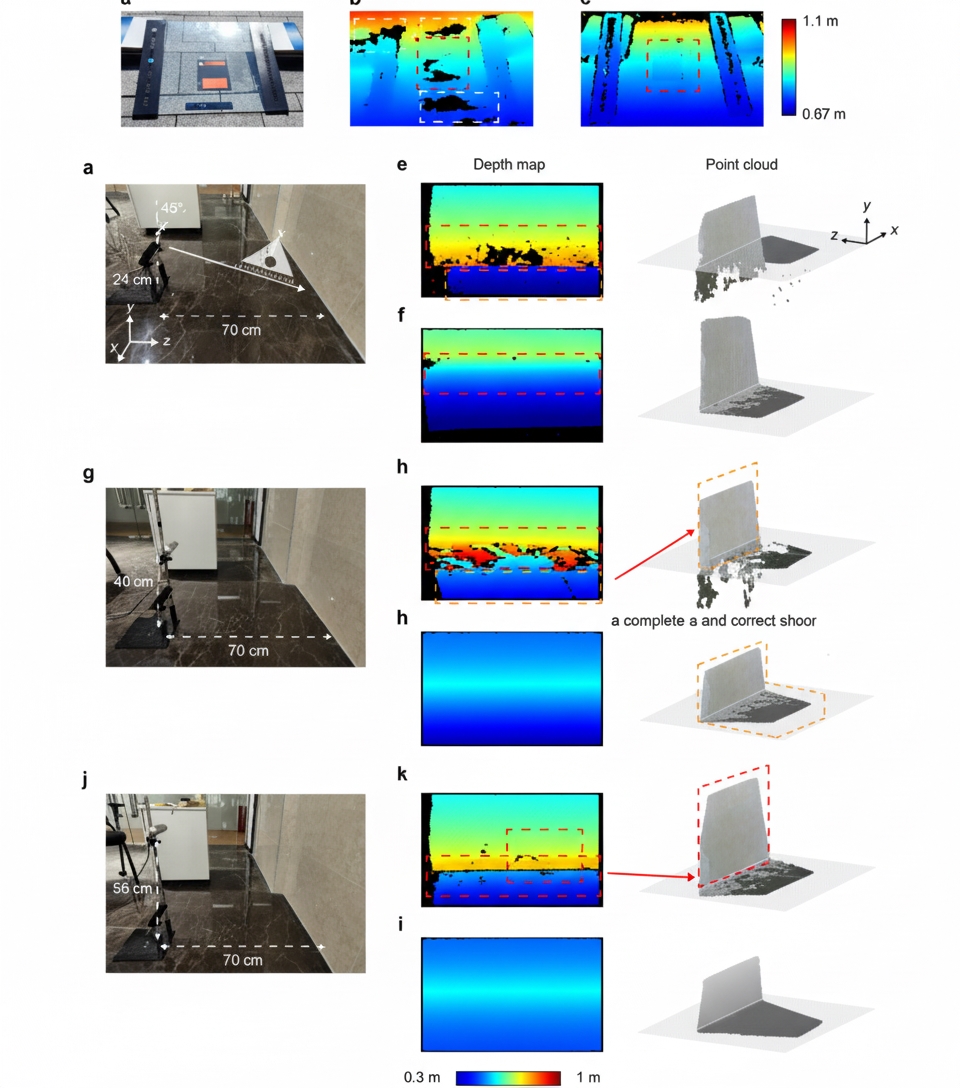

LiDAR (Light Detection and Ranging) performs remote sensing by emitting laser light and detecting the reflections optoelectronically. It emits laser pulses toward targets and compares the received signals with the transmitted ones. The result is a point cloud that captures the precise 3D structure of targets, including their distance, azimuth, height, velocity, and pose. LiDAR thus enables precise 3D reconstruction of the surrounding environment and is considered one of the most effective perception solutions for intelligent vehicles.
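A pulsed time-of-flight sketch can illustrate the comparison of received and transmitted signals. Cross-correlating the received waveform with the emitted pulse shape locates the echo delay, and the range then follows from R = c·t/2.

The sampled waveforms, the 1 GHz sample rate, and the function names are hypothetical. Real receivers use dedicated timing circuits and sub-sample interpolation rather than this brute-force search.

```python
C = 299_792_458.0  # speed of light in m/s

def echo_delay_samples(tx_pulse, rx_wave):
    """Return the lag (in samples) at which the transmitted pulse shape best
    matches the received waveform, using brute-force cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(rx_wave) - len(tx_pulse) + 1):
        score = sum(p * rx_wave[lag + i] for i, p in enumerate(tx_pulse))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def tof_range(delay_samples, sample_rate_hz):
    """Convert a round-trip delay in samples to one-way range: R = c * t / 2."""
    return C * (delay_samples / sample_rate_hz) / 2

def timing_resolution_needed(range_resolution_m):
    """Round-trip timing precision required for a given range resolution."""
    return 2 * range_resolution_m / C

tx = [1.0, 2.0, 1.0]                            # emitted pulse shape
rx = [0.0] * 10 + [0.5, 1.0, 0.5] + [0.0] * 5   # attenuated echo
lag = echo_delay_samples(tx, rx)                # 10 samples
print(tof_range(lag, 1e9))                      # about 1.5 m at 1 GHz sampling
print(timing_resolution_needed(0.05))           # about 3.3e-10 s for 5 cm
```

The last line shows why centimeter-level ranging needs timing precision of a few hundred picoseconds.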

A typical LiDAR system consists of four subsystems: laser transmission, laser reception, information processing, and scanning.

- **Transmission.** The laser driver periodically fires the laser to emit pulses. An optical beam controller adjusts the emission direction and number of beams, and the transmit optics send the beams out.
- **Reception.** The receive optics collect the reflected signals, and photodetectors detect them and pass them to the processing subsystem.
- **Processing.** The processing subsystem amplifies and converts the signals, then computes the surface geometry and physical attributes of targets to build object models.
- **Scanning.** The scanning subsystem changes the direction in which the beams are projected, either by stable mechanical rotation or by electronic steering. This scans the scene and produces real-time maps for 3D modeling and localization.
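For a mechanical spinning LiDAR, the scanning step can be sketched as follows. Each beam has a fixed elevation angle, and its range at the current rotation azimuth is converted into a Cartesian point.

The following are assumptions made for illustration:

- the frame convention (x forward, y left, z up),
- a uniform azimuth step,
- `None` for missing returns,
- the function names.

Real sensors also apply per-beam calibration and motion compensation.

```python
import math

def polar_to_cartesian(r, azimuth_rad, elevation_rad):
    """Sensor frame: x forward, y left, z up."""
    horizontal = r * math.cos(elevation_rad)   # projection onto the ground plane
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            r * math.sin(elevation_rad))

def build_point_cloud(ranges, elevations_deg, azimuth_step_deg):
    """ranges[i][j] is the range of beam i at azimuth step j (None = no return).
    Returns the list of (x, y, z) points for one revolution."""
    cloud = []
    for i, row in enumerate(ranges):
        el = math.radians(elevations_deg[i])
        for j, r in enumerate(row):
            if r is None:
                continue                        # no echo received for this shot
            az = math.radians(j * azimuth_step_deg)
            cloud.append(polar_to_cartesian(r, az, el))
    return cloud

# Two hypothetical beams (0 deg and -15 deg elevation) sampled every 90 deg.
scan = [[12.0, None, 8.5, 20.0],
        [6.0, 6.1, None, 5.9]]
cloud = build_point_cloud(scan, [0.0, -15.0], 90.0)
print(len(cloud))  # 6 points (two shots returned no echo)
```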

By scanning mechanism, LiDAR is generally categorized as mechanical LiDAR, hybrid solid-state LiDAR, and solid-state LiDAR. Mechanical LiDAR uses rotating parts to steer the laser for full scanning. Hybrid solid-state LiDAR avoids full mechanical rotation by using internal rotating glass elements, MEMS mirrors, scanning mirrors, or prisms. Solid-state LiDAR uses electronic steering to change emission direction and mainly includes flash LiDAR and optical phased array (OPA) types.

By emission wavelength, mainstream 3D LiDARs operate at either 905 nm or 1550 nm.

905 nm systems use semiconductor lasers and silicon receivers, which makes them cheaper and smaller. However, eye-safety limits cap their emission power and therefore their detection range.

At 1550 nm, light is largely absorbed by the front of the eye before it reaches the retina, so much higher emission power remains eye-safe. This gives longer range, stronger penetration, and less solar interference. The trade-off is that 1550 nm systems generally require fiber lasers and more expensive detector materials.

Key characteristics of LiDAR:

- High resolution: distance resolution can be better than ±5 cm, and the velocity resolution supports tracking multiple targets within about 10 m/s.

- Wide detection range: detection distance can exceed 300 m; horizontal field of view can reach 360°, vertical up to about 40°.

- Rich information: LiDAR can detect surrounding vehicles and effectively identify uneven road surfaces, debris, and large static obstacles.

- Day-and-night operation: as an active sensor, LiDAR works without external illumination.

- Good concealment and strong anti-interference capability: the small emission aperture and narrow receiving field of view limit the sources of interference.

- Limitations: multi-line LiDAR is relatively costly; performance can degrade in heavy rain, dense smoke, or fog; and LiDARs operating in the same wavelength band can interfere with each other.

3. Performance Comparison of Environment Perception Sensors

Higher levels of automated driving require complementary strengths from multiple sensor types.

Each sensor type has its own strengths and limitations, and the current trend is to use multi-sensor data fusion to compensate for the shortcomings of individual sensors. Fusion enables more accurate and comprehensive environmental recognition. This improves the safety and reliability of intelligent driving systems and is essential for higher levels of autonomous driving.

At the hardware level, fusion requires enough sensors to provide complementary functions, comprehensive information, high credibility, and strong recognition capability. At the software level, the algorithms must offer fast computation, parallel processing, good compatibility and coordination, and high fault tolerance so that decisions are both timely and accurate.
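One of the simplest fusion techniques is inverse-variance weighting of independent estimates of the same quantity. It is shown here only as an example, not as the method of any particular system, and it is the static special case of a Kalman filter update. The sensor variances below are hypothetical. The point is that the fused estimate has a smaller variance than any single sensor.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent, unbiased estimates.
    estimates: list of (value, variance) pairs.
    Returns (fused_value, fused_variance)."""
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = []
    for _, var in estimates:
        if var <= 0:
            raise ValueError("variances must be positive")
        weights.append(1.0 / var)            # more precise sensors get more weight
    total = sum(weights)
    fused = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total                # fused variance shrinks as sensors are added

radar = (50.0, 0.25)   # hypothetical radar range: 50 m, std 0.5 m
camera = (52.0, 4.0)   # hypothetical camera range: 52 m, std 2 m
value, variance = fuse_estimates([radar, camera])
print(round(value, 3), round(variance, 3))  # 50.118 0.235
```

The result leans toward the more precise radar while still using the camera measurement. The fused variance (0.235 m²) is below the radar's own 0.25 m².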

4. Conclusion

This article provided an overview of common environment perception sensors for intelligent vehicles, including vision sensors, millimeter-wave radar, and LiDAR, and compared their technical characteristics. Compared with other sensor types, LiDAR provides direct 3D perception and can achieve real-time 3D sensing with less reliance on complex algorithms and data. LiDAR has advantages in detection accuracy, reliability, and anti-interference capability. Solid-state LiDAR solutions using 1550 nm wavelength and FMCW ranging are current development trends. In the short term, a "millimeter-wave radar + camera" combination is the most likely practical configuration, while in the longer term, as costs decline, "solid-state LiDAR + camera" has greater potential to become mainstream.
