Introduction

One classic problem in robot learning is sorting: extracting a target item from a pile of unordered objects. For a human parcel sorter this is nearly automatic, but for a robotic arm it requires complex matrix computations.

A parcel sorter processing packages

Mathematical problems that take humans a long time to solve can be relatively easy for intelligent systems. The reverse is also true: the almost reflexive sorting actions that humans perform remain hard for robots, which is why they are a major focus for robotics researchers worldwide.

Perception and Pose

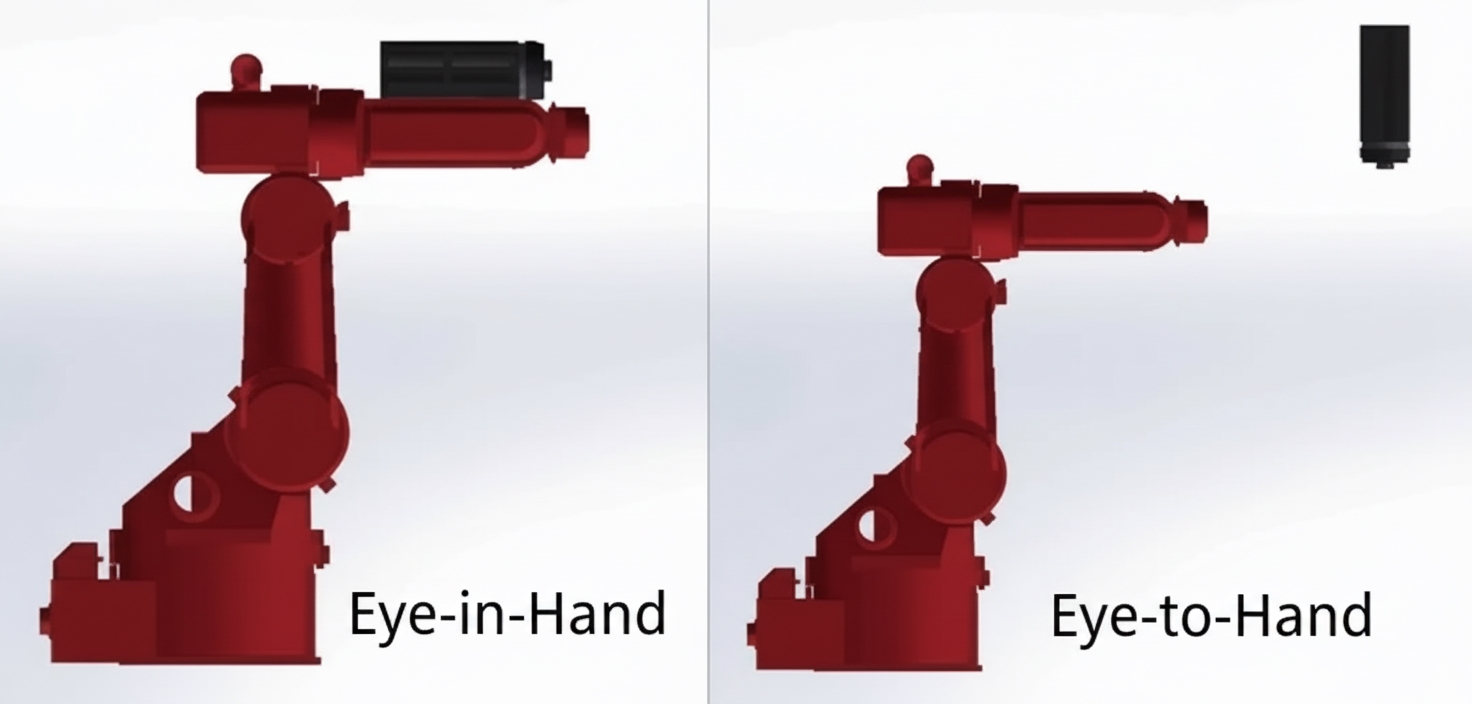

Robotic grasping requires determining the pose of each link of the manipulator as well as the position of the target. To locate objects, the manipulator first needs a visual servoing system. Based on the relative position of the end effector (hand) and the vision sensor (eye), such systems are divided into configurations; one common configuration is Eye-in-Hand.
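A minimal sketch of how a camera detection becomes a position the arm can use, assuming an Eye-in-Hand setup: the point measured in the camera frame is chained through a hand-eye calibration transform and the end effector's current pose (which the controller would obtain from forward kinematics). The frame names `T_base_ee` and `T_ee_cam`, all numeric values, and the restriction to rotations about the z axis are hypothetical simplifications.

```python
import math

Matrix = list[list[float]]
Point = tuple[float, float, float]

def yaw_transform(yaw_rad: float, tx: float, ty: float, tz: float) -> Matrix:
    """4x4 homogeneous transform: rotation about z by yaw_rad, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def compose(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product a @ b, i.e. apply b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def apply(t: Matrix, p: Point) -> Point:
    """Transform a 3D point with a homogeneous matrix."""
    x, y, z = p
    return (
        t[0][0] * x + t[0][1] * y + t[0][2] * z + t[0][3],
        t[1][0] * x + t[1][1] * y + t[1][2] * z + t[1][3],
        t[2][0] * x + t[2][1] * y + t[2][2] * z + t[2][3],
    )

# Hypothetical end-effector pose in the robot base frame (from forward kinematics).
T_base_ee = yaw_transform(math.pi / 2, 0.4, 0.0, 0.5)
# Hypothetical hand-eye calibration: camera pose relative to the end effector.
T_ee_cam = yaw_transform(0.0, 0.0, 0.05, 0.1)
# Object position reported by the camera, in the camera frame (metres).
p_cam = (0.1, 0.0, 0.3)

p_base = apply(compose(T_base_ee, T_ee_cam), p_cam)
print([round(v, 3) for v in p_base])  # prints [0.35, 0.1, 0.9]
```

Because `T_base_ee` changes every time the arm moves, the same camera reading maps to a different base-frame position after each motion, which is why the arm's own pose must be known precisely.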

In the Eye-in-Hand configuration, the vision sensor is mounted on the manipulator, so its field of view changes as the arm moves. Moving the sensor closer to the target increases accuracy, but moving it too close can push the target out of the field of view.
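The accuracy versus field-of-view trade-off can be estimated with a simple pinhole-camera model: the width of scene covered at working distance d is 2·d·tan(HFOV/2), and dividing it by the image width in pixels gives the size of one pixel on the target. The camera parameters and target width below are hypothetical, and the model ignores lens distortion and depth noise.

```python
import math

def footprint_width(distance_m: float, hfov_deg: float) -> float:
    """Width (m) of the scene covered at a working distance, pinhole camera model."""
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)

def mm_per_pixel(distance_m: float, hfov_deg: float, image_width_px: int) -> float:
    """Approximate lateral size (mm) of one pixel projected onto the target plane."""
    return footprint_width(distance_m, hfov_deg) * 1000.0 / image_width_px

HFOV_DEG = 90.0        # hypothetical horizontal field of view
IMAGE_WIDTH_PX = 1000  # hypothetical sensor width in pixels
TARGET_WIDTH_M = 0.3   # hypothetical object width

for d in (1.0, 0.5, 0.25, 0.1):
    resolution = mm_per_pixel(d, HFOV_DEG, IMAGE_WIDTH_PX)
    fits = footprint_width(d, HFOV_DEG) >= TARGET_WIDTH_M
    print(f"{d:.2f} m: {resolution:.2f} mm/px, target fits in view: {fits}")
```

With these numbers, each halving of the distance halves the millimetres per pixel (from 2.00 to 1.00 to 0.50), but at 0.1 m the covered width is only about 0.2 m, so the 0.3 m target no longer fits in view.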

Precise vision and a flexible manipulator must work together to achieve a successful grasp. The core problem is to find a suitable grasp point (or suction point) and hold the object there; transporting it afterwards falls under motion planning.
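One simple, hypothetical way to choose a suction point is to look for the flattest patch in a depth image, since a suction cup seals best on a flat surface. The sketch below scores each pixel by the depth variance in a small window and breaks ties in favour of surfaces closer to the camera; the function name, window size, and tie-break rule are illustrative assumptions, not a method from this article.

```python
from statistics import pvariance

def best_suction_point(depth: list[list[float]], radius: int = 1) -> tuple[int, int]:
    """Return (row, col) of the flattest interior pixel of a depth map.

    Flatness is the population variance of depth values in a
    (2*radius + 1) x (2*radius + 1) window; ties go to the smaller depth,
    i.e. the surface closest to the camera (top of the pile).
    """
    rows, cols = len(depth), len(depth[0])
    best_key, best_pos = None, None
    for r in range(radius, rows - radius):
        for c in range(radius, cols - radius):
            window = [
                depth[rr][cc]
                for rr in range(r - radius, r + radius + 1)
                for cc in range(c - radius, c + radius + 1)
            ]
            key = (pvariance(window), depth[r][c])
            if best_key is None or key < best_key:
                best_key, best_pos = key, (r, c)
    if best_pos is None:
        raise ValueError("depth map is too small for the chosen window radius")
    return best_pos

# Hypothetical 5x5 depth map (metres): a sloped pile with one flat 3x3 box top.
depth = [[0.6 + 0.02 * (r + c) for c in range(5)] for r in range(5)]
for r in range(1, 4):
    for c in range(2, 5):
        depth[r][c] = 0.55
print(best_suction_point(depth))  # prints (2, 3)
```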

Main Approaches

Model-based

This approach assumes a known object model. Each object is scanned in advance and its model data are provided to the robot system, so less computation is needed during the actual grasp. Typical steps:

- Online perception: use RGB images or point clouds to compute each object's 3D pose.

- Compute grasp points: in the world coordinate frame, select the best grasp point for each object, taking collision avoidance and other constraints into account.
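The two model-based steps can be sketched as follows, assuming that grasp points were defined on the scanned model offline (in the object's own frame) and that online perception has already produced a planar pose (x, y, yaw) for the object. The `ModelGrasp` and `Pose2D` types, the scores, and the reduction of collision avoidance to a minimum-clearance check against neighbouring objects are hypothetical simplifications.

```python
import math
from dataclasses import dataclass
from typing import Optional

Point2D = tuple[float, float]

@dataclass(frozen=True)
class ModelGrasp:
    """Grasp point defined offline on the scanned model, in the object frame."""
    x: float
    y: float
    score: float  # hypothetical precomputed grasp quality, higher is better

@dataclass(frozen=True)
class Pose2D:
    """Planar object pose in the world frame, from online perception."""
    x: float
    y: float
    yaw: float

def to_world(pose: Pose2D, g: ModelGrasp) -> Point2D:
    """Rotate the model grasp point by the object's yaw, then translate it."""
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (pose.x + c * g.x - s * g.y, pose.y + s * g.x + c * g.y)

def select_grasp(
    pose: Pose2D,
    grasps: list[ModelGrasp],
    obstacles: list[Point2D],
    clearance: float,
) -> Optional[Point2D]:
    """Best-scoring world-frame grasp point at least `clearance` from every obstacle."""
    feasible = []
    for g in grasps:
        p = to_world(pose, g)
        # Simplified collision avoidance: keep a minimum distance to neighbours.
        if all(math.dist(p, o) >= clearance for o in obstacles):
            feasible.append((g.score, p))
    return max(feasible)[1] if feasible else None

pose = Pose2D(x=1.0, y=0.5, yaw=math.pi / 2)
grasps = [ModelGrasp(0.1, 0.0, 0.9), ModelGrasp(-0.1, 0.0, 0.7), ModelGrasp(0.0, 0.05, 0.5)]
neighbours = [(1.0, 0.65)]  # centre of an adjacent object in the pile
print(select_grasp(pose, grasps, neighbours, clearance=0.08))
```

Here the highest-scoring grasp lands within the clearance distance of the neighbouring object, so the next-best collision-free grasp is chosen. Because the grasps were computed on the model in advance, only the transform and the checks run at grasp time.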

The RGB color space is composed of red, green, and blue channels. A color is represented as a combination of intensities in these three channels, and robots interpret color through these coordinate values, in a way similar to human vision and to common display systems.
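Because each pixel is just a point in RGB space, a basic color-based detector can compare pixel values against a target color. This minimal sketch marks pixels within a Euclidean distance threshold of pure red; the image, target color, and tolerance are hypothetical, and fixed thresholds like this are sensitive to lighting changes.

```python
import math

Pixel = tuple[int, int, int]  # (red, green, blue), each channel 0-255

def color_mask(image: list[list[Pixel]], target: Pixel, tolerance: float) -> list[list[bool]]:
    """Mark pixels whose RGB value is within `tolerance` (Euclidean distance) of `target`."""
    return [[math.dist(px, target) <= tolerance for px in row] for row in image]

# Hypothetical 2x2 image: reddish parts on the left, a blue and a white part on the right.
image = [
    [(250, 10, 10), (20, 20, 200)],
    [(230, 40, 30), (240, 240, 240)],
]
print(color_mask(image, target=(255, 0, 0), tolerance=60.0))  # prints [[True, False], [True, False]]
```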

CGrasp unordered grasping of precision bearings

Half-model-based

This approach does not require complete prior knowledge of every object, but it does require training data from many similar objects so that the algorithms learn to segment images and identify object boundaries effectively. Typical steps:

- Offline: train an image segmentation model that groups pixels by object. The training data are usually produced by data annotators, who label large datasets according to engineering requirements.

- Online: apply the trained segmentation to incoming images and search for suitable grasp points on the segmented objects.
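As a minimal illustration of the online step, the sketch below takes a segmentation result (a label image where 0 is background and each positive integer is one object), computes each object's pixel centroid, and estimates its major-axis orientation from second-order central image moments. Treating the centroid as the grasp point and closing a parallel-jaw gripper across the major axis is a common simple heuristic assumed here, not this article's method; the function name and input format are hypothetical.

```python
import math
from collections import defaultdict

def grasps_from_labels(labels: list[list[int]]) -> dict[int, tuple[float, float, float]]:
    """Map each object label (> 0) to (row, col, angle).

    (row, col) is the pixel centroid; angle (radians) is the major-axis direction,
    measured from the column (x) axis toward the row (y) axis.
    """
    pixels = defaultdict(list)
    for r, row in enumerate(labels):
        for c, lab in enumerate(row):
            if lab > 0:
                pixels[lab].append((r, c))
    result = {}
    for lab, pts in pixels.items():
        n = len(pts)
        mr = sum(p[0] for p in pts) / n
        mc = sum(p[1] for p in pts) / n
        # Second-order central moments of the object's pixel distribution.
        mu_rr = sum((p[0] - mr) ** 2 for p in pts) / n
        mu_cc = sum((p[1] - mc) ** 2 for p in pts) / n
        mu_rc = sum((p[0] - mr) * (p[1] - mc) for p in pts) / n
        angle = 0.5 * math.atan2(2 * mu_rc, mu_cc - mu_rr)
        result[lab] = (mr, mc, angle)
    return result

# Hypothetical segmentation output: a horizontal bar (1) and a vertical bar (2).
labels = [
    [0, 0, 0, 0, 0, 0, 2],
    [1, 1, 1, 1, 1, 0, 2],
    [0, 0, 0, 0, 0, 0, 2],
    [0, 0, 0, 0, 0, 0, 2],
]
for lab, (row, col, angle) in sorted(grasps_from_labels(labels).items()):
    print(lab, (row, col), round(math.degrees(angle), 1))
```

The horizontal bar gets a major-axis angle of 0 degrees and the vertical bar 90 degrees; a gripper would close perpendicular to those directions. In a real system the pixel coordinates would still need to be converted to the robot frame using depth data and the camera calibration.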

This approach is currently widespread. Image segmentation has advanced quickly, and that progress has helped drive development in both robotics and autonomous driving.

Model-free

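Model-free methods do not rely on explicit object models. Instead, the robot learns grasping strategies directly from sensor data, typically by sampling antipodal points: pairs of contact points on opposite sides of an object whose surface normals roughly oppose each other, so that a two-finger gripper can potentially squeeze the object between them. Training typically relies on large-scale self-supervised trials in which manipulators repeatedly attempt grasps on varied objects and use the outcomes as labels. Large-scale grasp farms, where many arms collect such attempts in parallel, are representative examples.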
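The antipodal condition can be made precise for two point contacts with Coulomb friction coefficient μ: the line joining the contacts must lie within the friction cone (half-angle arctan μ) around the inward surface normal at each contact. This sketch tests that condition and randomly samples point pairs that satisfy it within a maximum gripper opening; the points, normals, μ = 0.5, and the 0.08 m opening are hypothetical.

```python
import math
import random

Vec = tuple[float, float, float]

def _angle(u: Vec, v: Vec) -> float:
    """Angle in radians between two non-zero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    cos = dot / (math.hypot(*u) * math.hypot(*v))
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp against rounding error

def _neg(v: Vec) -> Vec:
    return (-v[0], -v[1], -v[2])

def is_antipodal(p1: Vec, n1: Vec, p2: Vec, n2: Vec, mu: float) -> bool:
    """True if segment p1-p2 lies inside the friction cone at both contacts.

    n1, n2 are outward surface normals. A jaw at p1 squeezes toward p2, so the
    direction p1->p2 must be within atan(mu) of the inward normal -n1, and
    likewise p2->p1 must be within atan(mu) of -n2.
    """
    half_angle = math.atan(mu)
    d = (p2[0] - p1[0], p2[1] - p1[1], p2[2] - p1[2])
    return _angle(d, _neg(n1)) <= half_angle and _angle(_neg(d), _neg(n2)) <= half_angle

def sample_antipodal_pairs(points: list[Vec], normals: list[Vec], mu: float,
                           max_width: float, n_samples: int, seed: int = 0) -> list[tuple[int, int]]:
    """Randomly sample index pairs; keep those that are antipodal and fit the gripper."""
    rng = random.Random(seed)
    found = set()
    for _ in range(n_samples):
        i, j = rng.sample(range(len(points)), 2)
        if math.dist(points[i], points[j]) > max_width:
            continue  # wider than the gripper can open
        if is_antipodal(points[i], normals[i], points[j], normals[j], mu):
            found.add((min(i, j), max(i, j)))
    return sorted(found)

# Hypothetical contacts on a 4 cm wide box: two side faces and the top face.
points = [(0.0, 0.0, 0.0), (0.04, 0.0, 0.0), (0.02, 0.0, 0.02), (0.04, 0.01, 0.0)]
normals = [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]
print(sample_antipodal_pairs(points, normals, mu=0.5, max_width=0.08, n_samples=200))
```

Pairs that pass this geometric test are only candidates; in the model-free approach described above, which of them actually succeed is learned from the outcomes of real grasp attempts.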

Note that grasping difficulty varies widely with object shape. Even objects with the same shape can be much harder or easier to grasp depending on surface reflectivity and ambient lighting, because both affect how well the camera can perceive the object. There is still a significant gap between laboratory results and commercial deployment.

Challenges and Trends

Developing high-precision cameras is the first step for robotic perception. In commercial settings, the most troublesome object is often the next one the system has never seen. For industrial robots to integrate into real production systems, they need intelligent controllers that can adjust flexibly to different operating conditions, which will also broaden the range of scenarios where they can be applied.