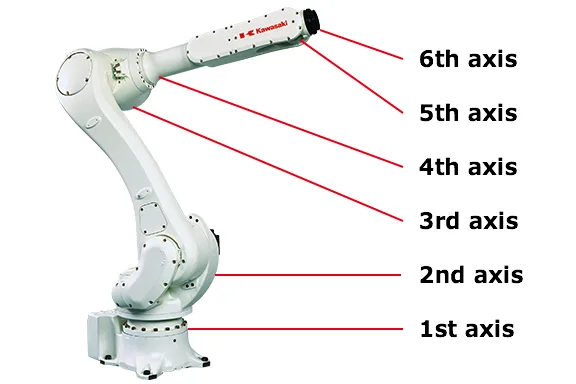

What a hand-eye system is

A hand-eye system describes the relationship between a camera (the eye) and a robot arm (the hand) so that the arm can grasp a target the camera sees. Label the eye A, the brain B, and the hand C. If the A-B and B-C relationships are known, the A-C relationship follows by chaining them.

A camera measures positions in pixel coordinates, whereas the robot arm moves in its own spatial coordinate frame. In practice, hand-eye calibration determines the transformation between the camera's pixel coordinate system and the robot's coordinate system.
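To make the A-B, B-C chaining concrete, the following Python sketch composes planar homogeneous transforms. It treats the brain B as the robot base frame (an assumption for this sketch), and the poses, numbers and helper names (`make_pose`, `compose`, `invert`, `transform_point`) are hypothetical; it illustrates the chaining only and is not a calibration routine.

```python
import math

def make_pose(theta_rad, tx, ty):
    """Planar homogeneous transform: rotate by theta_rad, then translate by (tx, ty)."""
    c, s = math.cos(theta_rad), math.sin(theta_rad)
    return [[c, -s, tx],
            [s, c, ty],
            [0.0, 0.0, 1.0]]

def compose(t1, t2):
    """3x3 matrix product t1 * t2: apply t2 first, then t1."""
    return [[sum(t1[i][k] * t2[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def invert(t):
    """Inverse of a planar rigid transform: rotation R^T, translation -R^T t."""
    r00, r01, r10, r11 = t[0][0], t[0][1], t[1][0], t[1][1]
    tx, ty = t[0][2], t[1][2]
    return [[r00, r10, -(r00 * tx + r10 * ty)],
            [r01, r11, -(r01 * tx + r11 * ty)],
            [0.0, 0.0, 1.0]]

def transform_point(t, x, y):
    """Map the point (x, y) through the homogeneous transform t."""
    return (t[0][0] * x + t[0][1] * y + t[0][2],
            t[1][0] * x + t[1][1] * y + t[1][2])

# Hypothetical poses, for illustration only (units: mm, radians).
# Eye A relative to brain B: camera frame expressed in the robot base frame.
T_base_cam = make_pose(math.radians(90.0), 400.0, 0.0)
# Hand C relative to brain B: tool frame in the base frame, e.g. from forward kinematics.
T_base_tool = make_pose(math.radians(30.0), 250.0, 120.0)

# Chaining the two known relationships gives camera -> tool directly.
T_tool_cam = compose(invert(T_base_tool), T_base_cam)

# A point seen by the camera, expressed in the tool frame.
print(transform_point(T_tool_cam, 10.0, -5.0))
```

Going through the base frame explicitly (camera to base, then base to tool) gives the same point as the single chained matrix, which is the sense in which A-B and B-C determine A-C.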

In the control loop, once the camera detects a target's pixel position, the calibrated transformation matrix converts those pixel coordinates into the robot's coordinate frame. The robot controller then computes the joint motions needed to reach that position (inverse kinematics) and drives the arm there. The complete workflow typically involves camera calibration, image processing, forward and inverse kinematics, and hand-eye calibration.
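One pass of this loop can be sketched in Python for a hypothetical two-link planar arm. The calibration matrix (written in HALCON's six-element HomMat2D order), the link lengths, the target pixel and all function names are illustrative assumptions; a real controller solves inverse kinematics for its own arm geometry.

```python
import math

# Hypothetical calibration result in HomMat2D order [a, b, c, d, e, f]:
#   x = a*px + b*py + c,  y = d*px + e*py + f
HOM_MAT_2D = [0.0, -0.5, 300.0, 0.5, 0.0, -100.0]
L1, L2 = 200.0, 150.0  # hypothetical link lengths (mm) of a two-link planar arm

def pixel_to_robot(h, px, py):
    """Convert a pixel position to robot coordinates with the 2D affine matrix."""
    a, b, c, d, e, f = h
    return a * px + b * py + c, d * px + e * py + f

def inverse_kinematics(x, y, l1=L1, l2=L2):
    """Joint angles (rad) that place the arm tip at (x, y); one elbow solution."""
    cos_q2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_q2 <= 1.0:
        raise ValueError("target out of reach")
    q2 = math.acos(cos_q2)
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2

def forward_kinematics(q1, q2, l1=L1, l2=L2):
    """Tip position for joint angles (q1, q2)."""
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))

# Detected pixel -> robot coordinates -> joint command.
target_px = (240.0, 320.0)  # hypothetical detection result
x, y = pixel_to_robot(HOM_MAT_2D, *target_px)
q1, q2 = inverse_kinematics(x, y)
print(x, y, math.degrees(q1), math.degrees(q2))
```

Running the joint angles back through `forward_kinematics` should reproduce `(x, y)`, which is a cheap sanity check on the inverse-kinematics step.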

Nine-point calibration

One common calibration method is nine-point calibration.

Nine-point calibration directly establishes the transformation between image coordinates and robot coordinates for a fixed working plane. Move the robot end effector to nine positions and record each position in the robot coordinate system; at the same time, detect the nine points in the camera image to obtain their pixel coordinates. This yields nine pairs of corresponding coordinates. The mapping is modeled as a 2D affine transformation with six unknowns, and each point pair contributes two equations, so at least three non-collinear point pairs are required to solve for the calibration matrix. The additional pairs are used in a least-squares fit, which reduces the effect of measurement noise.
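The transformation equation can be written as the 2D affine model that HALCON's `vector_to_hom_mat2d` approximates. Here $(u_i, v_i)$ are the pixel coordinates and $(x_i, y_i)$ the robot coordinates of point $i$; the symbol names are chosen for illustration.

$$
\begin{bmatrix} x_i \\ y_i \end{bmatrix}
=
\begin{bmatrix} a & b & c \\ d & e & f \end{bmatrix}
\begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix},
\qquad i = 1, \dots, 9
$$

There are six unknowns and each pair gives two linear equations, so three pairs suffice when the matrix with rows $(u_i, v_i, 1)$ has rank 3, which holds exactly when the points are not all collinear. With nine pairs the system is overdetermined and is solved in the least-squares sense:

$$
\min_{a,\dots,f} \sum_{i=1}^{9} \Big[ (a u_i + b v_i + c - x_i)^2 + (d u_i + e v_i + f - y_i)^2 \Big]
$$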

Example using Halcon

The example below performs a nine-point calibration with HALCON operators in HDevelop syntax; the same operators can also be called from C# through HALCON's .NET interface.

```
* Image coordinates (Row, Column) of the nine calibration marks,
* computed from the mark regions segmented and sorted earlier
area_center (SortedRegions, Area, Row, Column)
* Robot end-effector coordinates for the 9 points, listed in the
* same order as SortedRegions
Column_robot := [275,225,170,280,230,180,295,240,190]
Row_robot := [55,50,45,5,0,-5,-50,-50,-50]
* Solve for the transformation: HomMat2D maps image coordinates
* to robot coordinates
vector_to_hom_mat2d (Row, Column, Row_robot, Column_robot, HomMat2D)
```
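What `vector_to_hom_mat2d` computes can be sketched as an ordinary linear least-squares affine fit. The Python below is an assumption-based illustration, not HALCON's implementation; `fit_affine`, `solve3` and `det3` are hypothetical helpers, and the result uses the same six-element order as a HALCON HomMat2D tuple.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(m, rhs):
    """Solve the 3x3 system m * x = rhs with Cramer's rule."""
    d = det3(m)
    sol = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        sol.append(det3(mc) / d)
    return sol

def fit_affine(src, dst):
    """Least-squares fit of [a, b, c, d, e, f] with
    dst_x = a*u + b*v + c and dst_y = d*u + e*v + f for (u, v) in src."""
    n = len(src)
    if n != len(dst) or n < 3:
        raise ValueError("need at least three point pairs")
    # Center the pixel coordinates to keep the normal equations well conditioned.
    mu = sum(u for u, _ in src) / n
    mv = sum(v for _, v in src) / n
    rows = [(u - mu, v - mv, 1.0) for u, v in src]
    # Normal matrix A^T A, shared by the x and y problems.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    # Hadamard's inequality bounds det(ata) by its diagonal product,
    # so a tiny ratio means the points are (nearly) collinear.
    if abs(det3(ata)) <= 1e-12 * ata[0][0] * ata[1][1] * ata[2][2]:
        raise ValueError("points are collinear or not distinct")
    atx = [sum(r[i] * q[0] for r, q in zip(rows, dst)) for i in range(3)]
    aty = [sum(r[i] * q[1] for r, q in zip(rows, dst)) for i in range(3)]
    a, b, c0 = solve3(ata, atx)
    d, e, f0 = solve3(ata, aty)
    # Undo the centering so the result applies to raw pixel coordinates.
    return [a, b, c0 - a * mu - b * mv, d, e, f0 - d * mu - e * mv]
```

Centering the pixel coordinates before building the normal equations is a design choice that keeps them well conditioned; the offset is folded back into `c` and `f` at the end. Feeding the nine (pixel, robot) pairs as `src` and `dst` returns the six matrix entries.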

Applying the calibration

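To apply the calibration, detect the target in a new image to obtain its pixel coordinates (Row2, Column2), then transform them with HomMat2D:

```
* Row2, Column2: pixel coordinates of the newly detected target
* Qx, Qy: the corresponding coordinates in the robot base frame
* (Qx follows the Row_robot axis, Qy the Column_robot axis)
affine_trans_point_2d (HomMat2D, Row2, Column2, Qx, Qy)
```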
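Applying the matrix is a single affine evaluation, and the same evaluation on the calibration points gives a quick quality check. The sketch below assumes the HALCON HomMat2D tuple layout [a, b, c, d, e, f]; the matrix values, the target pixel and the helper names `affine_trans_point` and `rms_residual` are hypothetical.

```python
import math

def affine_trans_point(h, px, py):
    """Map a pixel (px, py) to robot coordinates with h = [a, b, c, d, e, f]."""
    a, b, c, d, e, f = h
    return a * px + b * py + c, d * px + e * py + f

def rms_residual(h, pixels, robot_points):
    """Root-mean-square distance between mapped pixels and measured robot points."""
    total = 0.0
    for (px, py), (qx, qy) in zip(pixels, robot_points):
        mx, my = affine_trans_point(h, px, py)
        total += (mx - qx) ** 2 + (my - qy) ** 2
    return math.sqrt(total / len(pixels))

# Hypothetical calibration matrix and detected target pixel.
H = [0.0, -0.5, 300.0, 0.5, 0.0, -100.0]
qx, qy = affine_trans_point(H, 240.0, 320.0)
print(qx, qy)
```

Evaluating `rms_residual` on the nine calibration pairs after fitting is a simple way to spot problems; a large value often indicates that the robot points were listed in a different order than the detected marks.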

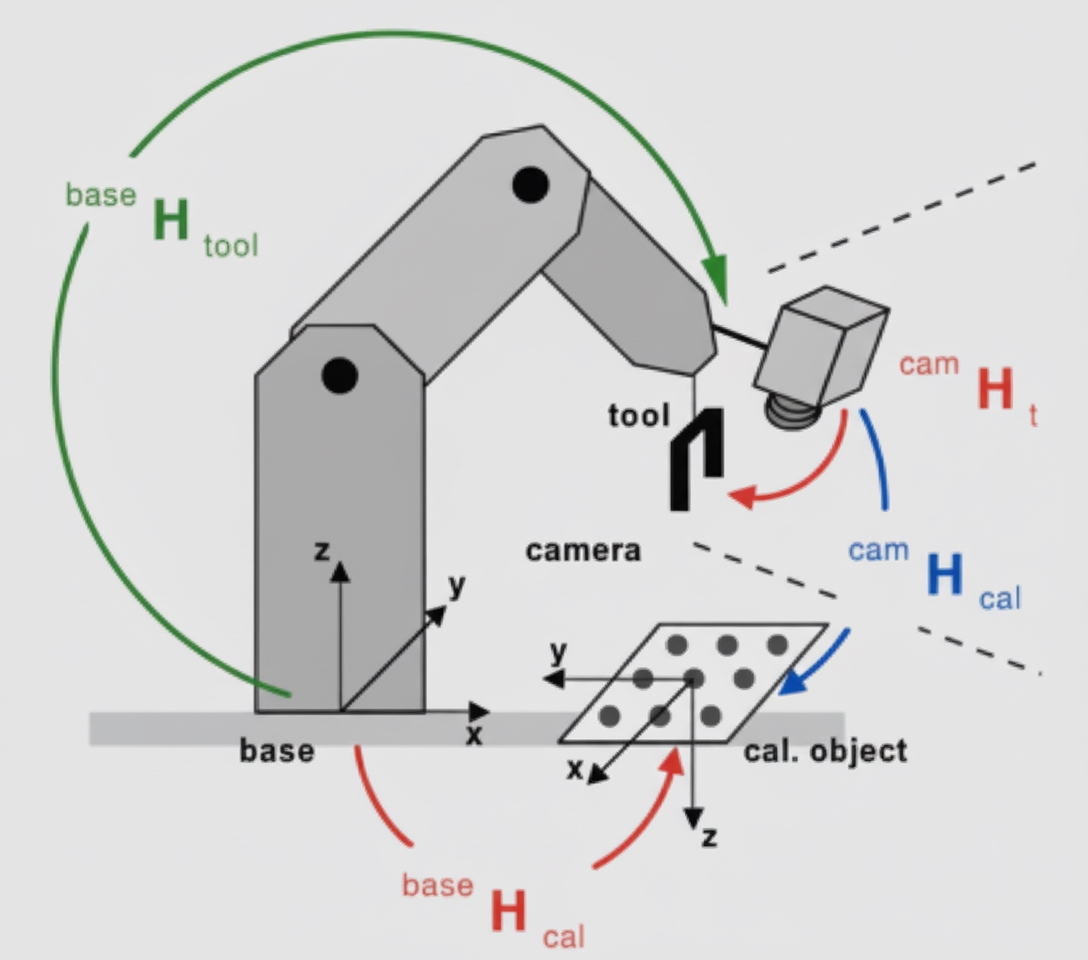

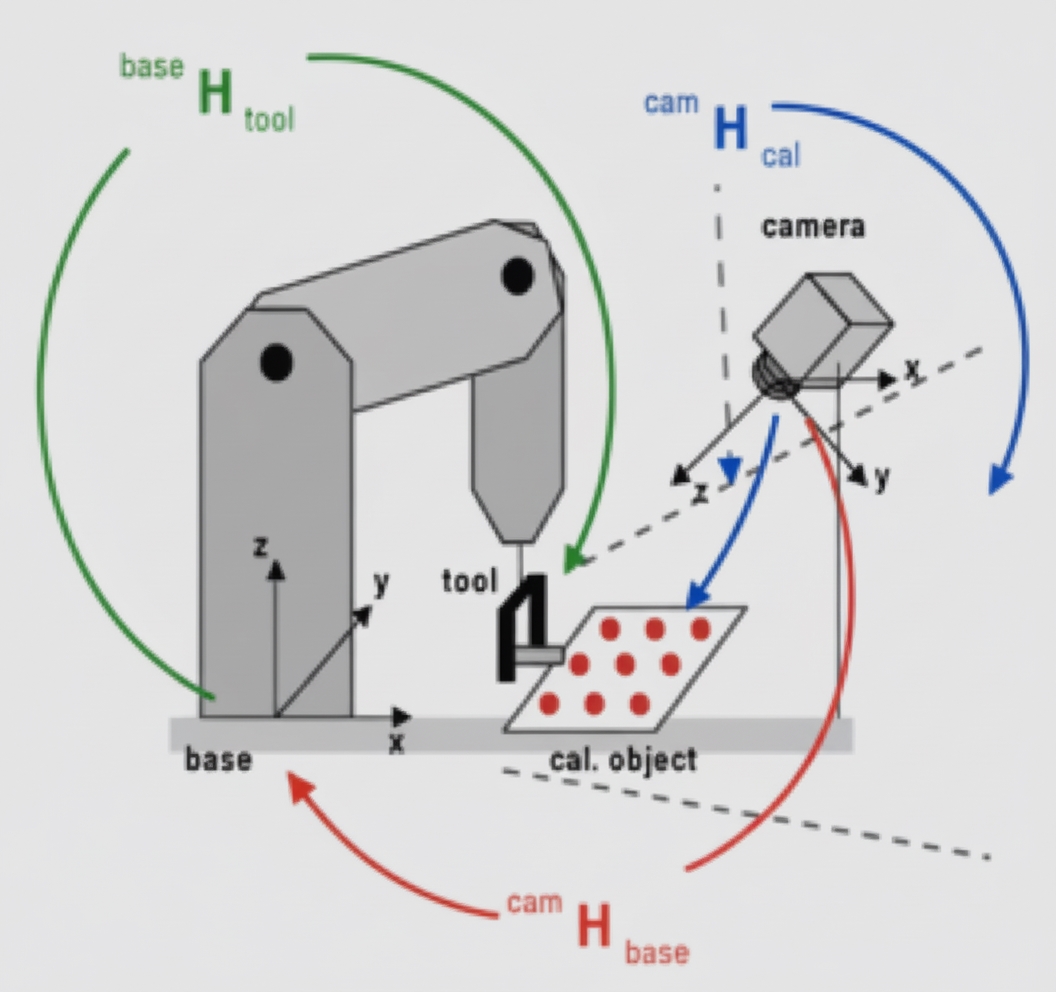

Special cases: eye-to-hand and eye-in-hand

There are two common configurations.

Eye-to-hand: the camera is fixed in the environment. The camera images the target, the system converts the target position into the robot frame, and the robot moves to grasp the object. Because the camera never moves relative to the robot base, a single calibration stays valid, which makes this configuration straightforward to understand and implement.

Eye-in-hand: the camera is mounted on the robot end effector. This configuration can be calibrated with the same procedure used for a separate camera and robot: during calibration, the robot moves to the calibration poses, the camera acquires an image at each pose, and the calibration yields the relationship between the camera and the robot. Many systems with an end-effector-mounted camera work this way, so eye-in-hand calibration can be treated much like eye-to-hand calibration, provided the camera always captures images from the same robot pose so that its view of the working plane stays fixed.