Overview

InfiniBand (IB) is a networking standard defined by the InfiniBand Trade Association (IBTA). It is widely used in high performance computing (HPC) because it provides high throughput and low latency for network transport.

InfiniBand provides critical data connectivity both inside and between compute systems. Whether connected via direct links or through switches, InfiniBand enables high-performance transfers between servers and storage. The scalability of InfiniBand networks supports horizontal scaling via switching fabrics to meet diverse networking needs. With the rapid growth of scientific computing, artificial intelligence (AI), and cloud data centers, InfiniBand is increasingly used for end-to-end high-performance interconnects in HPC supercomputing deployments.

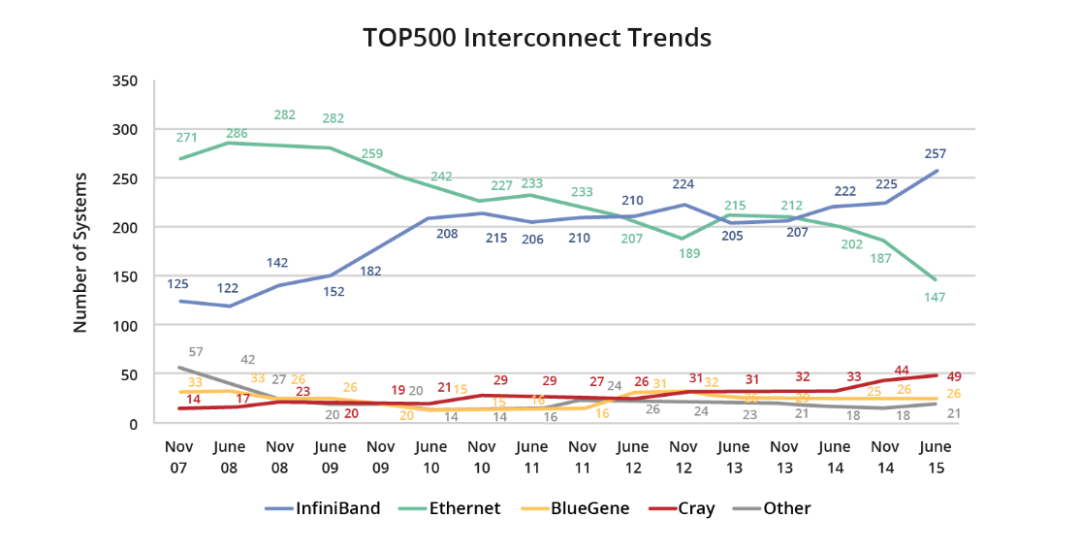

In June 2015, InfiniBand accounted for 51.8% of systems on the Top500 list, a year-over-year increase of 15.8%.

In the June 2022 Top500 list, InfiniBand again led as the primary interconnect for supercomputers in both count and performance. Key trends included:

- InfiniBand-based supercomputers led with 189 systems.

- InfiniBand-based systems dominated the top 100 with 59 systems.

- NVIDIA GPU and networking products, notably Mellanox HDR Quantum QM87xx switches and BlueField DPUs, were used in over two-thirds of those systems.

Beyond traditional HPC, InfiniBand is used in enterprise data centers and public cloud environments. Leading systems and cloud services use InfiniBand networks to provide high-performance interconnects.

In the November 2023 Top500 list, InfiniBand maintained a leading position, underscoring its continued growth driven by performance advantages.

InfiniBand Network Advantages

InfiniBand is regarded as a forward-looking standard for high performance computing interconnects, with strengths in supercomputer networking, storage, and HPC LAN connections. Its advantages include simplified management, high bandwidth, full CPU offload, ultra-low latency, cluster scalability and flexibility, quality of service (QoS), and SHARP support.

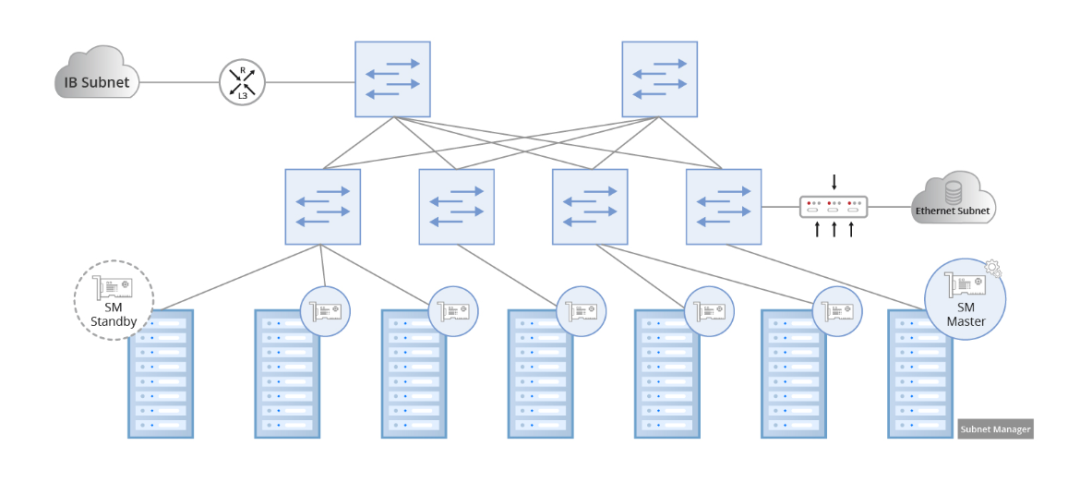

Network Management

InfiniBand was designed for software-defined networking (SDN) architectures and is managed by a subnet manager. The subnet manager configures the local subnet and ensures seamless operation. To manage traffic, all channel adapters and switches implement a subnet management agent (SMA) to interact with the subnet manager. At least one subnet manager is required to perform initial setup and reconfiguration when links are established or torn down. An arbitration mechanism designates a primary subnet manager, while others operate in standby mode. Standby managers maintain backup topology information and monitor subnet health, taking over if the primary fails to ensure uninterrupted subnet management.
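The primary/standby behavior described above can be sketched in a few lines of Python. This is an illustration, not a subnet manager implementation: the `SubnetManager` class, the `elect_master` function, and the sample values are hypothetical, and the tie-break rule (highest priority first, then lowest GUID) is an assumption here, since the text only says that an arbitration mechanism exists.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class SubnetManager:
    name: str
    priority: int      # assumption: a higher priority wins arbitration
    guid: int          # assumption: a lower GUID wins when priorities tie
    alive: bool = True

def elect_master(managers):
    """Return the live manager that wins arbitration, or None if none is alive."""
    candidates = [m for m in managers if m.alive]
    if not candidates:
        return None
    return min(candidates, key=lambda m: (-m.priority, m.guid))

managers = [
    SubnetManager("sm-a", priority=10, guid=0x0002),
    SubnetManager("sm-b", priority=10, guid=0x0001),
    SubnetManager("sm-c", priority=5, guid=0x0000),
]

master = elect_master(managers)
print("primary:", master.name)

# Simulate a failure of the primary: a standby manager takes over.
after_failure = [replace(m, alive=(m.name != master.name)) for m in managers]
print("new primary:", elect_master(after_failure).name)
```

In this sample, `sm-b` wins the first election on the GUID tie-break and `sm-a` takes over once `sm-b` is marked as failed.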

Higher Bandwidth

InfiniBand has historically offered higher link rates than Ethernet, driven by its role in server interconnects for HPC. Earlier rates included 40Gb/s QDR and 56Gb/s FDR. More recent InfiniBand rates such as 100Gb/s EDR and 200Gb/s HDR are widely adopted in supercomputers. The introduction of large-scale AI models has led some organizations to consider 400Gb/s NDR InfiniBand products, including NDR switches and optical cabling.

Common InfiniBand rate abbreviations (4x links; where two values are given, they are signaling rate/data rate):

- SDR - Single Data Rate, 10Gbps/8Gbps.

- DDR - Double Data Rate, 20Gbps/16Gbps.

- QDR - Quad Data Rate, 40Gbps/32Gbps.

- FDR - Fourteen Data Rate, 56Gbps.

- EDR - Enhanced Data Rate, 100Gbps.

- HDR - High Data Rate, 200Gbps.

- NDR - Next Data Rate, 400Gbps.

- XDR - Extreme Data Rate, 800Gbps.
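The relationship between signaling rate and data rate in the list above follows from lane count and line encoding. The sketch below works through that arithmetic for SDR through EDR on a 4x link. The per-lane signaling rates and encodings are not stated in the text above; they are filled in from commonly published InfiniBand figures and should be read as assumptions. HDR and later generations are left out because their PAM4 signaling and forward error correction make the arithmetic less direct.

```python
from fractions import Fraction

# Per-lane signaling rate in Gb/s and line encoding (payload bits, total bits).
# Assumed values, taken from commonly published InfiniBand figures.
LANE_RATES = {
    "SDR": (Fraction("2.5"), (8, 10)),
    "DDR": (Fraction("5"), (8, 10)),
    "QDR": (Fraction("10"), (8, 10)),
    "FDR": (Fraction("14.0625"), (64, 66)),
    "EDR": (Fraction("25.78125"), (64, 66)),
}

def link_rates(generation, lanes=4):
    """Return (signaling_gbps, data_gbps) for a link with the given lane count."""
    lane_rate, (payload_bits, total_bits) = LANE_RATES[generation]
    signaling = lane_rate * lanes
    data = signaling * payload_bits / total_bits
    return float(signaling), float(data)

for generation in LANE_RATES:
    signaling, data = link_rates(generation)
    print(f"{generation}: {signaling:g} Gb/s signaling, {data:.2f} Gb/s data")
```

Exact fractions are used so that the encoding overhead does not introduce rounding noise; for example, EDR comes out at exactly 100 Gb/s of data on 103.125 Gb/s of signaling.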

Efficient CPU Offload

CPU offload is essential for maximizing compute performance. InfiniBand facilitates data transfer with minimal CPU involvement by:

- Offloading the entire transport protocol stack to hardware.

- Enabling kernel bypass and zero-copy transfers.

- Using RDMA (remote direct memory access) to move data directly between server memories without CPU involvement.

GPUDirect enables direct access to GPU memory and accelerates transfers from GPU memory to other nodes, improving performance for AI, deep learning training, and machine learning workloads.

Low Latency

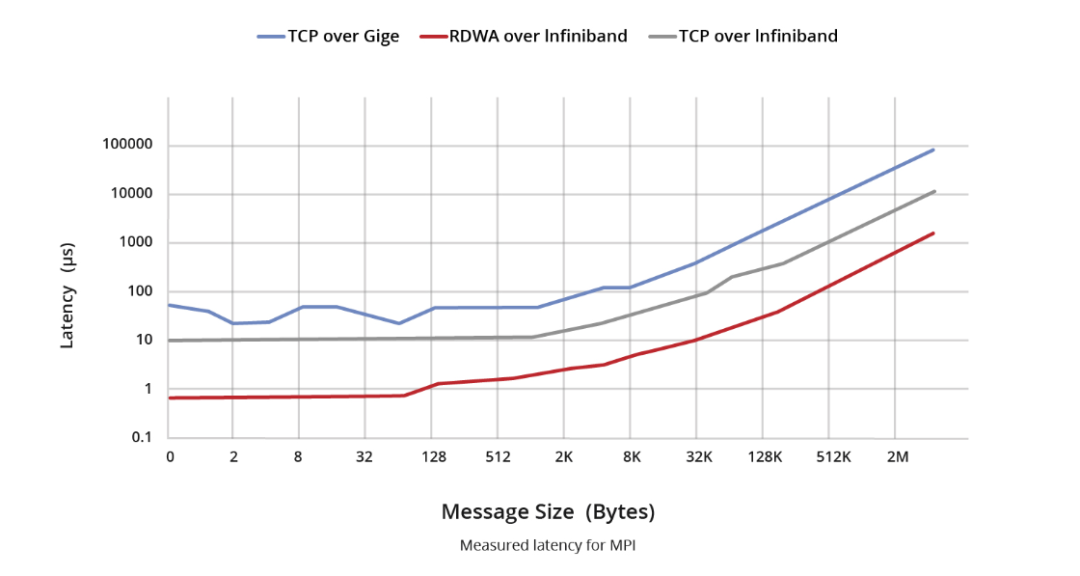

Latency differences between InfiniBand and Ethernet can be viewed in two parts. At the switch level, Ethernet switches operate at Layer 2 using MAC table lookups and store-and-forward mechanisms, and may perform additional processing for IP, MPLS, QinQ, and other services, leading to microsecond-scale processing delays (some cut-through implementations can achieve sub-200ns). InfiniBand switches simplify Layer 2 processing by forwarding on a 16-bit LID. With cut-through forwarding, their latency can fall below 100ns, lower than that of typical Ethernet switches.

At the host adapter level, RDMA eliminates CPU traversal for message forwarding, reducing encapsulation and decapsulation overhead. Typical InfiniBand adapter send/receive latencies (write, send) are around 600ns, while Ethernet TCP/UDP-based send/receive latencies often hover near 10us, resulting in an order-of-magnitude latency advantage for InfiniBand.
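As a rough worked example, the figures quoted above can be combined into a one-way latency estimate. The sketch assumes a hypothetical path of three switch hops and uses the best-case Ethernet cut-through figure; it ignores cable propagation, serialization delay, and congestion, so the result is only an order-of-magnitude comparison.

```python
def one_way_latency_ns(adapter_ns, switch_ns, hops):
    """Adapter send/receive latency plus per-switch forwarding latency."""
    return adapter_ns + switch_ns * hops

HOPS = 3  # hypothetical path length, e.g. leaf -> spine -> leaf

# Figures from the text: ~600 ns adapter and <100 ns per switch for InfiniBand,
# ~10 us host stack and sub-200 ns cut-through switching for Ethernet.
infiniband_ns = one_way_latency_ns(adapter_ns=600, switch_ns=100, hops=HOPS)
ethernet_ns = one_way_latency_ns(adapter_ns=10_000, switch_ns=200, hops=HOPS)

print(f"InfiniBand: {infiniband_ns} ns")
print(f"Ethernet:   {ethernet_ns} ns")
print(f"Ratio:      {ethernet_ns / infiniband_ns:.1f}x")
```

Under these assumptions the host adapter term dominates in both cases, which is why the gap stays close to an order of magnitude regardless of hop count.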

Scalability and Flexibility

InfiniBand can deploy up to 48,000 nodes in a single subnet, forming a large Layer 2 fabric. InfiniBand avoids broadcast mechanisms like ARP, preventing broadcast storms and associated bandwidth waste. Multiple InfiniBand subnets can be connected via routers and switches, supporting diverse topologies.
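The single-subnet limit of roughly 48,000 nodes follows from the 16-bit LID address space. The sketch below shows the arithmetic, assuming the commonly documented split of that space into a unicast range (0x0001 to 0xBFFF) and a multicast range (0xC000 to 0xFFFE); those boundaries are not given in the text above. Switches also consume LIDs, so the number of usable host endpoints is somewhat lower than the raw count.

```python
# Assumed LID ranges; 0x0000 is reserved and 0xFFFF is the permissive LID.
UNICAST_FIRST, UNICAST_LAST = 0x0001, 0xBFFF
MULTICAST_FIRST, MULTICAST_LAST = 0xC000, 0xFFFE

def unicast_lid_count():
    """Number of LIDs that can be assigned to individual ports in one subnet."""
    return UNICAST_LAST - UNICAST_FIRST + 1

def is_unicast(lid):
    return UNICAST_FIRST <= lid <= UNICAST_LAST

def is_multicast(lid):
    return MULTICAST_FIRST <= lid <= MULTICAST_LAST

print(unicast_lid_count())            # 49151
print(unicast_lid_count() // 1024)    # 47, i.e. just under 48K
```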

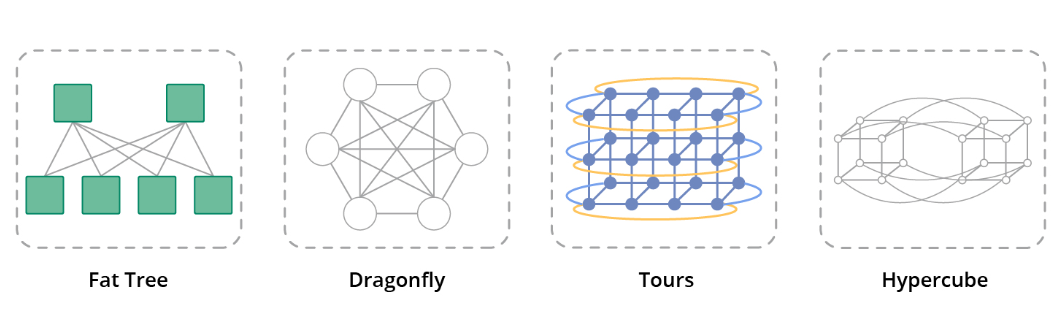

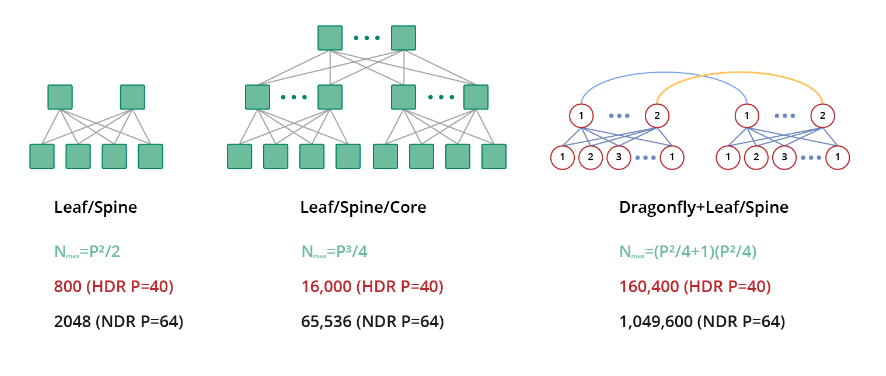

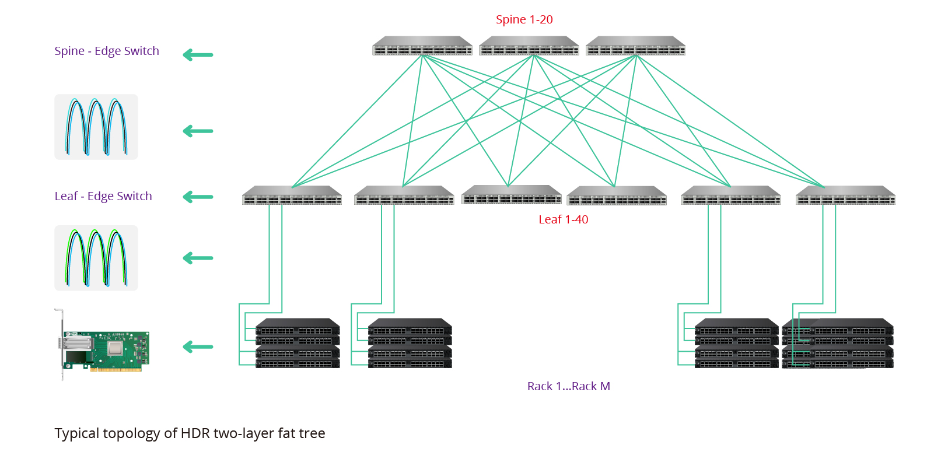

For smaller deployments, a two-layer fat-tree topology is common; larger deployments often use a three-layer fat-tree. At very large scales, cost-effective Dragonfly topologies can improve scalability.
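To see where the two-layer and three-layer designs hit their limits, the standard fat-tree sizing formulas can be applied to a given switch radix. The sketch assumes a non-blocking fat tree built entirely from identical k-port switches, with half of each leaf switch's ports facing hosts; the function names are illustrative, and real deployments often use oversubscription or mixed switch models that change these numbers.

```python
def two_level_hosts(radix):
    """Max hosts in a non-blocking two-level fat tree of k-port switches: k^2 / 2."""
    return radix * radix // 2

def three_level_hosts(radix):
    """Max hosts in a non-blocking three-level fat tree of k-port switches: k^3 / 4."""
    return radix ** 3 // 4

def two_level_switches(radix):
    """Leaf and spine switch counts for a fully populated two-level fat tree."""
    leaves = radix          # each spine port connects to a distinct leaf
    spines = radix // 2     # each leaf has k/2 uplinks, one per spine
    return leaves, spines

RADIX = 40  # e.g. a 40-port 200G HDR switch
print(two_level_hosts(RADIX))      # 800
print(three_level_hosts(RADIX))    # 16000
print(two_level_switches(RADIX))   # (40, 20)
```

With 40-port switches, a two-layer design tops out at 800 hosts, which is the point at which a third layer becomes necessary.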

Quality of Service (QoS)

When multiple applications with different priorities share a subnet, QoS becomes important. InfiniBand implements QoS via virtual lanes (VLs). VLs are discrete logical channels sharing a physical link. Each link can support up to 15 data virtual lanes (VL0 to VL14) plus one management lane (VL15). This mechanism isolates traffic by priority and enables prioritized forwarding for high-priority applications.
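The idea of several logical lanes sharing one physical link can be illustrated with a simplified arbiter. This sketch is not the InfiniBand VL arbitration algorithm: it assumes that management traffic on VL15 is always sent first and that data lanes are served by plain weighted round-robin. The function name, weights, and packet labels are hypothetical.

```python
from collections import deque

MANAGEMENT_VL = 15

def transmit_order(queues, weights):
    """Drain per-VL queues onto one link and return the packet order.

    queues:  dict mapping VL number -> deque of packets (consumed in place)
    weights: dict mapping data VL number -> packets allowed per round (default 1)
    """
    order = []
    # Simplifying assumption: management traffic bypasses arbitration.
    management = queues.get(MANAGEMENT_VL, deque())
    while management:
        order.append(management.popleft())

    data_vls = sorted(vl for vl in queues if vl != MANAGEMENT_VL)
    while any(queues[vl] for vl in data_vls):
        for vl in data_vls:
            for _ in range(weights.get(vl, 1)):
                if not queues[vl]:
                    break
                order.append(queues[vl].popleft())
    return order

queues = {
    0: deque(["bulk1", "bulk2", "bulk3"]),   # low-priority storage traffic
    1: deque(["mpi1", "mpi2", "mpi3"]),      # latency-sensitive traffic
    15: deque(["smp1"]),                     # subnet management packet
}
print(transmit_order(queues, weights={0: 1, 1: 2}))
```

Giving VL1 twice the weight of VL0 lets the latency-sensitive queue drain ahead of the bulk queue without starving it.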

Stability and Resilience

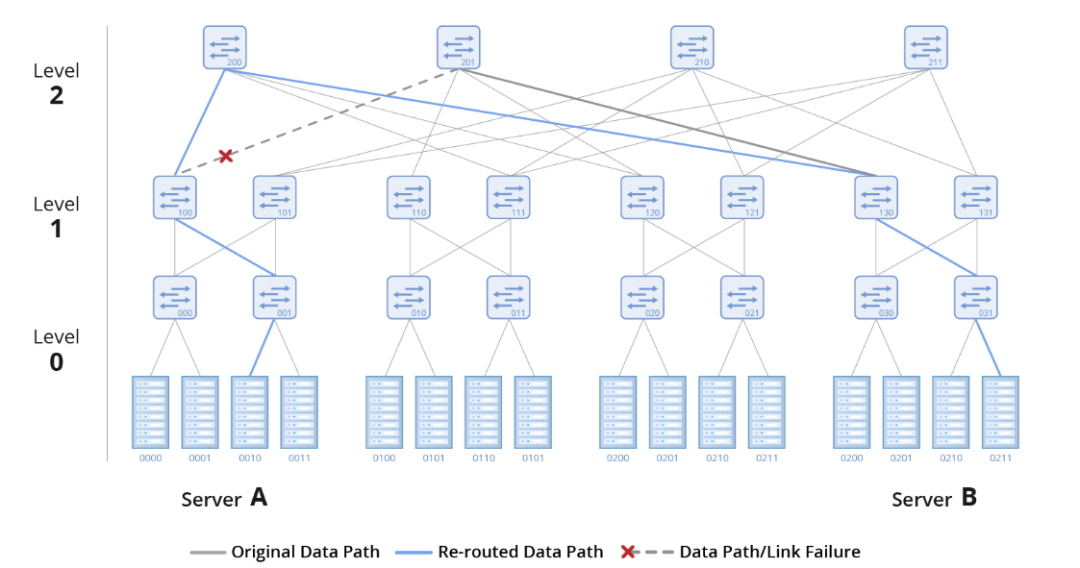

Networks inevitably experience faults during long-term operation. InfiniBand switches include hardware mechanisms for rapid recovery, often called self-healing networking. Because these mechanisms are embedded in switch hardware, links recover quickly from failures, minimizing downtime.

Optimized Load Balancing

Maximizing fabric utilization requires load balancing. InfiniBand uses adaptive routing to distribute traffic across available ports. Adaptive routing is hardware-supported and managed by an adaptive routing manager.

When adaptive routing is active, the switch queue manager monitors traffic on egress ports, balances load across queues, and routes traffic to underutilized ports. This dynamic balancing prevents congestion and optimizes bandwidth utilization.
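The port selection step described above can be sketched as a least-loaded choice among candidate egress ports. This is a conceptual model only: actual adaptive routing runs in switch hardware, and the queue depths, port numbers, and function names below are hypothetical.

```python
def pick_egress_port(candidate_ports, queue_depth):
    """Choose the candidate port with the shortest egress queue (lowest port wins ties)."""
    return min(candidate_ports, key=lambda port: (queue_depth[port], port))

def forward(packets, candidate_ports, queue_depth):
    """Assign each packet to a port, updating queue depths as packets are enqueued."""
    assignment = []
    for packet in packets:
        port = pick_egress_port(candidate_ports, queue_depth)
        queue_depth[port] += 1
        assignment.append((packet, port))
    return assignment

depth = {1: 7, 2: 3, 3: 3, 4: 9}   # packets currently queued per egress port
print(forward(["p0", "p1", "p2"], [1, 2, 3, 4], depth))
print(depth)
```

Because the queue depth is updated after every decision, consecutive packets spread across the underutilized ports instead of piling onto a single one.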

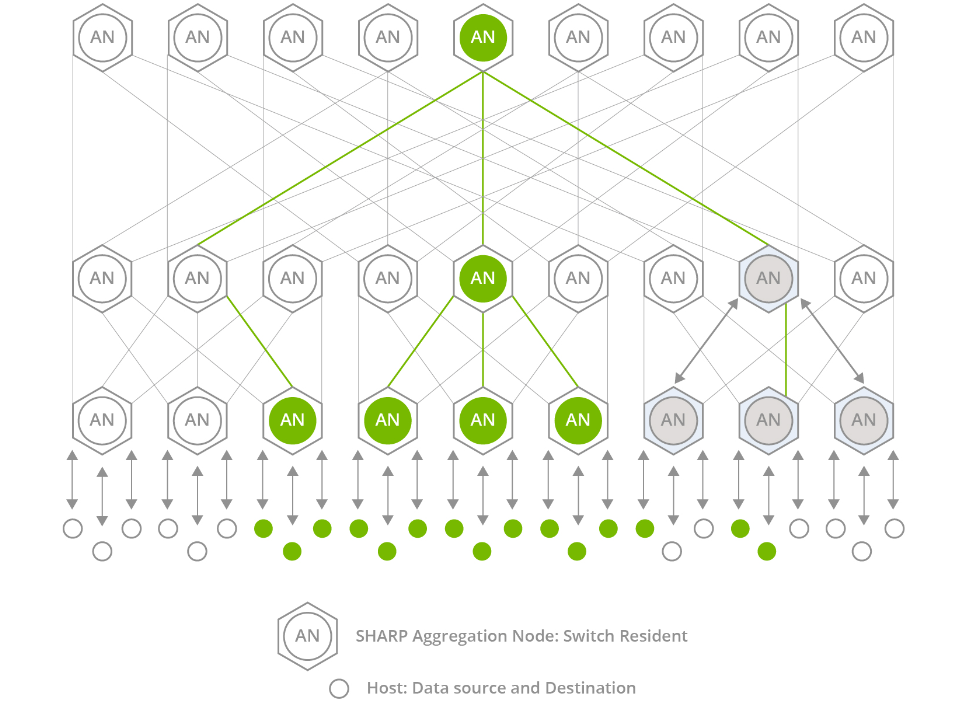

Network Offload: SHARP

InfiniBand switches can implement SHARP, the Scalable Hierarchical Aggregation and Reduction Protocol. SHARP is integrated into switch hardware to offload aggregation communication tasks from CPUs and GPUs to the switch. By eliminating redundant data transfers between nodes, SHARP reduces the volume of data traversing the fabric and improves performance for collective operations commonly used in MPI workloads, AI, and machine learning.
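The effect of in-network aggregation can be modeled with a small reduction tree. The sketch below is a conceptual illustration of hierarchical aggregation, not the SHARP protocol itself: hosts are plain lists of numbers, switches are dictionaries with a `children` key, and both representations are assumptions made for the example.

```python
def aggregate(node):
    """Return (elementwise_sum, link_messages) for the subtree rooted at node.

    A host is a list of numbers; a switch is {"children": [...]}.
    Each child sends exactly one vector up the link to its parent switch.
    """
    if isinstance(node, list):          # a host contributes its own vector
        return list(node), 0
    total, messages = None, 0
    for child in node["children"]:
        partial, below = aggregate(child)
        messages += below + 1           # one vector crosses the child-to-parent link
        if total is None:
            total = partial
        else:
            total = [a + b for a, b in zip(total, partial)]
    return total, messages

leaf_a = {"children": [[1, 2], [3, 4]]}
leaf_b = {"children": [[5, 6], [7, 8]]}
root = {"children": [leaf_a, leaf_b]}

result, messages = aggregate(root)
print(result, messages)   # [16, 20] 6
```

In this four-host example the root switch receives two partial sums instead of four host vectors, which is the data reduction the paragraph above describes.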

Supported Topologies

InfiniBand supports various topologies, including fat tree, torus, Dragonfly+, hypercube, and HyperX, to meet different goals such as scalability, lower total cost of ownership, minimized latency, and extended reach.

InfiniBand's technical capabilities simplify high-performance fabric architectures and reduce latency introduced by multi-tier hierarchies. Its high bandwidth, low latency, and interoperability with Ethernet make it applicable across a range of scenarios.

InfiniBand HDR Product Summary

As client needs evolve, 100Gb/s EDR is being phased out. HDR offers flexibility with HDR100 (100G) and HDR200 (200G) and is widely adopted. NDR at 400Gb/s is available for scenarios requiring higher link rates.

InfiniBand HDR Switches

NVIDIA provides HDR InfiniBand switches in two main formats. The modular CS8500 chassis offers up to 800 HDR 200Gb/s ports in a 29U chassis. Each 200G port can split to 2x100G, supporting up to 1600 HDR100 ports. The QM87xx fixed switches are 1U units with 40 QSFP56 200G ports on the front panel. These ports can be split into up to 80 HDR100 ports to connect 100G HDR NICs. Each port also supports backward compatibility to EDR rates for connecting 100G EDR NICs. Note that a single 200G HDR port can step down to a 100G EDR connection but cannot split into two 100G EDR ports for connecting two EDR NICs.

Two QM87xx models are available: MQM8700-HS2F and MQM8790-HS2F. They differ in management method: the QM8700 includes an out-of-band management port, while the QM8790 is managed via NVIDIA UFM.

Each model offers two airflow options. The MQM8790-HS2F uses P2C (power-to-cable) airflow and is identified by blue markers on the fan modules. MQM8790-HS2R uses C2P (cable-to-power) airflow with red markers on the fan modules. Airflow direction can also be verified by feeling the intake and exhaust at the switch.

QM8700 and QM8790 switches are commonly used for two connection types: direct connection to 200G HDR NICs using 200G-to-200G AOC/DAC cables, or connection to 100G HDR NICs by splitting a physical 200G (4x50G) QSFP56 port into two virtual 100G (2x50G) ports using a 200G-to-2x100G cable. After splitting, port labels change from x/y to x/y/z, where x/y indicates the original port and z identifies the sub-port (1 or 2).
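The labeling scheme and the resulting port counts can be expressed as a short helper. The function names are hypothetical, and the label format simply follows the x/y to x/y/z convention described above.

```python
def split_port_labels(label, sub_ports=2):
    """Return the sub-port labels produced by splitting a port labeled x/y."""
    if len(label.split("/")) != 2:
        raise ValueError(f"expected a label of the form x/y, got {label!r}")
    return [f"{label}/{z}" for z in range(1, sub_ports + 1)]

def hdr100_port_count(hdr_ports):
    """Each 200G HDR port splits into two 100G HDR100 ports."""
    return hdr_ports * 2

print(split_port_labels("1/5"))    # ['1/5/1', '1/5/2']
print(hdr100_port_count(40))       # 80 on a 40-port QM87xx
print(hdr100_port_count(800))      # 1600 on a fully populated CS8500
```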

InfiniBand HDR Network Interface Cards (NICs)

HDR NICs are available in multiple configurations. Rate options include HDR100 and HDR200.

HDR100 NICs support 100Gb/s. Two HDR100 ports can connect to an HDR switch via a 200G-to-2x100G cable. Unlike 100G EDR NICs, which use 4x25G NRZ signaling only, HDR100 ports support both 4x25G NRZ and 2x50G PAM4 signaling.

200G HDR NICs support 200Gb/s and can use 200G direct attach cables to connect to the switch.

For each rate, NICs are available in single-port, dual-port, and different PCIe form factors. Common IB HDR NIC models are available from multiple vendors.

HDR InfiniBand architectures offer clear design choices across switch, NIC, and cabling options. For 100Gb/s, solutions include 100G EDR and 100G HDR100. For 200Gb/s, options include HDR and NDR200. Switches, NICs, and accessories differ by vendor and application, supporting data center, HPC, edge compute, and AI use cases.

Comparisons: InfiniBand, Ethernet, Fibre Channel, and Omni-Path

InfiniBand vs Ethernet

- Different roles: InfiniBand and Ethernet are key communication technologies for data transport, each suited to different use cases.

- Historical rates: InfiniBand rates have scaled from SDR 10Gb/s and have exceeded early gigabit Ethernet rates.

- Current leadership: InfiniBand supports 100G EDR and 200G HDR and is evolving to 400G NDR and 800G XDR.

- Latency: InfiniBand meets stricter latency targets, with switch forwarding latency below 100ns and adapter send/receive latency around 600ns.

- Ideal use: InfiniBand excels where fast, precise data movement is required, such as supercomputing, large-scale data analytics, machine learning training and inference.

- Ethernet role: Ethernet is known for reliability and is appropriate for stable, consistent LAN data transport.

The primary differences are rate and latency characteristics. InfiniBand is often preferred in HPC fabrics where fast data movement is crucial, while Ethernet is suitable for general LAN connectivity and reliable transport.

InfiniBand vs Fibre Channel

Fibre Channel is primarily used in storage area networks (SANs) to connect servers, storage arrays, and clients with high-speed, reliable links. Fibre Channel offers dedicated, secure channels optimized for storage use. The main distinction between InfiniBand and Fibre Channel is the typical data type they support: Fibre Channel focuses on storage traffic in SANs, while InfiniBand is often used to connect CPUs, memory, clusters, and I/O controllers for compute-centric environments.

InfiniBand vs Omni-Path

Both Omni-Path and InfiniBand have been used for high-performance data center fabrics at 100Gb/s. Although similar in performance, their fabric designs differ. For example, a 400-node cluster might require fewer InfiniBand Quantum 8000-series switches and specific cabling than an Omni-Path deployment, which can require more switches and additional active optics. InfiniBand EDR solutions often present advantages in device cost, operations, and power consumption compared with some alternatives, making them a practical option for many HPC deployments.